Lead scraping is more than just collecting data; it's a strategic process that can fuel your entire sales and marketing engine. But without a clear framework, it's easy to waste resources on low-quality leads, violate platform terms, or even face legal challenges. The difference between a thriving lead pipeline and a blocked IP address often comes down to adhering to a core set of principles.

This guide outlines 10 essential lead scraping best practices that recruiters, sales professionals, and marketers must follow to build sustainable, high-quality contact lists. We'll move beyond generic advice to provide actionable strategies for everything from ethical compliance and data validation to modern automation workflows. You'll learn how to turn the web into your most powerful source of qualified leads—the right way.

We'll cover critical tactics like respecting a website's rules and behaving like a human user, as well as strategic workflows for data enrichment and secure storage. This listicle will equip you with a repeatable process for efficient and responsible data collection. We'll also explore how modern, no-code tools like ProfileSpider simplify these complex processes, enabling business users to focus on results, not technical hurdles. By implementing these practices, you can ensure your lead generation efforts are both effective and sustainable.

1. Respect Robots.txt and Terms of Service

The absolute first step in any ethical lead scraping process is to check the target website's rules. This foundational practice isn't just about being a good internet citizen; it's a critical compliance measure that protects your business from potential legal and technical penalties. Before extracting any data, you must review two key documents: the robots.txt file and the Terms of Service (ToS). The robots.txt file tells automated web crawlers which pages or sections of the site they are not allowed to access, while the ToS outlines the legal rules for using the site, often explicitly stating its policy on automated data collection.

Ignoring these guidelines is a significant risk. Many major platforms, like LinkedIn, strictly prohibit unauthorized automated scraping in their user agreements. Violating these terms can lead to your IP address being blocked or, in severe cases, legal action. This is why understanding the legal landscape of website scraping is so crucial for sustainable lead generation. By adhering to these rules, you ensure your lead scraping best practices are built on a solid, defensible foundation.

Practical Implementation

To put this into practice, always make a compliance check your first step, and expect the answer to differ by site. For example, GitHub's terms permit some scraping of public information but restrict how personal data collected that way may be used, so tech recruiters should read the current policy before treating it as a lead source. Many e-commerce sites, by contrast, disallow scraping of pricing data to prevent competitor analysis.

- Actionable Tips for Compliance:

- Always Read First: Before launching any scraping tool, locate and carefully read the website's ToS, usually found in the site's footer.

- Check robots.txt: Access this file by adding `/robots.txt` to the end of the root domain (e.g., `example.com/robots.txt`). Look for "Disallow" directives to see which paths are off-limits.

- Use Compliant Tools: Opt for solutions like ProfileSpider that are designed to operate within the permitted boundaries of public data extraction. This simplifies compliance and removes the guesswork for non-technical users.

- Document Everything: Keep a log of your compliance review for each data source. Note the date you checked the ToS and `robots.txt` and summarize the relevant rules. This documentation is invaluable if your practices are ever questioned.
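The robots.txt check described above can also be done programmatically. The following is a minimal sketch using Python's standard `urllib.robotparser` module; the sample rules and the `is_path_allowed` helper are hypothetical and exist only for illustration. It covers robots.txt only and says nothing about a site's Terms of Service, which still need to be read by a person.

```python
from urllib.robotparser import RobotFileParser


def is_path_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(is_path_allowed(SAMPLE_ROBOTS, "https://example.com/team"))
print(is_path_allowed(SAMPLE_ROBOTS, "https://example.com/private/list"))
```

To check a live site instead of a pasted string, `RobotFileParser` also offers `set_url()` and `read()`, which download the file before `can_fetch()` is called.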

2. Implement Rate Limiting and Throttling

Beyond respecting formal rules, ethical scraping involves behaving like a considerate user rather than an aggressive bot. This is where rate limiting and throttling come in. These practices involve intentionally slowing down the speed of your data requests to avoid overwhelming a website's server. Hitting a site with hundreds of requests per second can degrade its performance for human users and will almost certainly trigger automated defense systems, leading to your IP address being blocked. Implementing a "polite" scraping speed is a core component of sustainable lead scraping best practices.

Many websites, especially large social networks and directories, have built-in rate limits that automatically block suspicious, high-frequency activity. For example, platforms like LinkedIn are notoriously sensitive to rapid, automated profile views. Attempting to scrape too quickly will result in temporary or even permanent restrictions. By throttling your requests, you mimic human browsing behavior, which significantly reduces the risk of detection and ensures you can continue collecting data long-term without interruption. This thoughtful approach protects both your access and the stability of the data source.

Practical Implementation

The ideal delay between requests varies depending on the target website's sensitivity. For instance, extracting business profiles from a public directory like Google Maps might work well with a 1-2 second delay between each request. In contrast, highly monitored platforms like Instagram or LinkedIn require much slower, more randomized intervals to avoid triggering their sophisticated anti-bot systems.

- Actionable Tips for Throttling:

- Start Conservatively: Begin with a generous delay, such as 2-3 seconds between requests. You can gradually decrease the delay to find the optimal speed that doesn't trigger blocks.

- Use Randomized Delays: Instead of a fixed interval (e.g., exactly 1 second), use a random delay between 1 and 3 seconds. This better simulates human behavior and is harder for anti-scraping systems to detect.

- Monitor Server Responses: Watch for error pages or messages. If you suddenly get blocked or see a "Too Many Requests" error (HTTP status 429), it's a clear signal that you need to slow down immediately.

- Choose Smart Tools: Modern solutions like ProfileSpider are designed with built-in politeness policies. They automatically manage request speeds to ensure stable and compliant data extraction, removing the manual guesswork for the user.
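For readers who script their own collection, the throttling tips above might look like the sketch below. It is an illustration under stated assumptions, not a production scraper: `fetch` is a hypothetical callable you supply that returns a status code and a body, the function names are made up for this example, and the delay values simply mirror the 1 to 3 second range suggested above.

```python
import random
import time


def polite_delay(min_seconds: float = 1.0, max_seconds: float = 3.0) -> float:
    """Pick a random pause between requests instead of a fixed interval."""
    return random.uniform(min_seconds, max_seconds)


def backoff_delay(attempt: int, base_seconds: float = 2.0, cap_seconds: float = 60.0) -> float:
    """Double the wait after each 'Too Many Requests' response, up to a cap."""
    return min(cap_seconds, base_seconds * (2 ** attempt))


def fetch_all(urls, fetch, sleep=time.sleep, max_retries=3):
    """Fetch URLs one at a time. `fetch(url)` must return (status_code, body)."""
    results = {}
    for url in urls:
        for attempt in range(max_retries + 1):
            status, body = fetch(url)
            if status != 429:
                results[url] = body
                break
            # The server asked us to slow down, so wait longer before retrying.
            sleep(backoff_delay(attempt))
        # Pause between URLs whether or not the fetch succeeded.
        sleep(polite_delay())
    return results
```

URLs that still return 429 after the last retry are left out of the result, which makes them easy to spot and revisit later at a slower pace.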

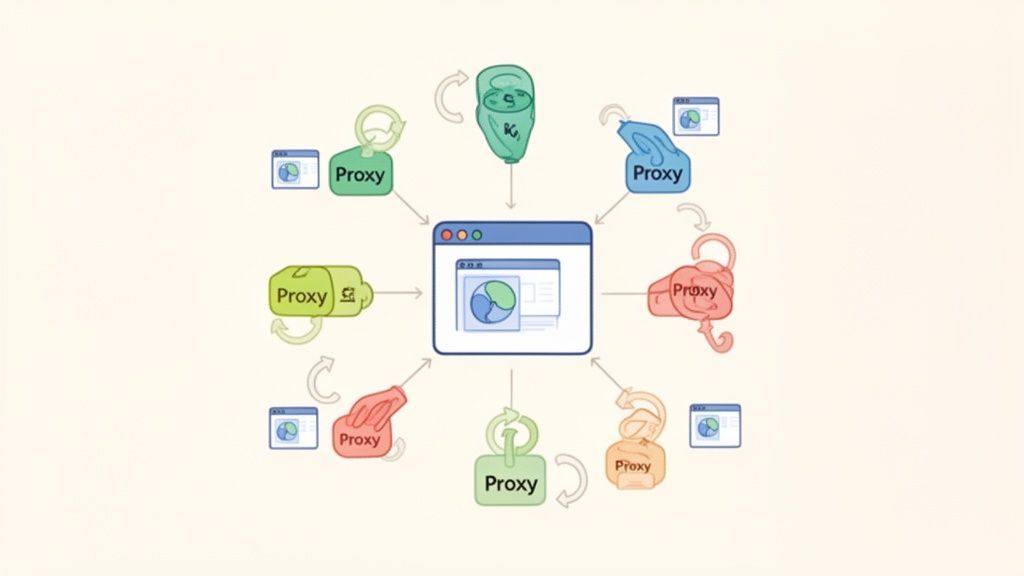

3. Use Rotating Proxies and User-Agent Strings

Even when operating within a website's rules, making a high volume of requests from a single IP address can trigger automated anti-bot systems. This is where using rotating proxies and user-agent strings becomes a crucial technical practice. This strategy involves routing your data extraction requests through a pool of different IP addresses (proxies) and cycling through various browser identifiers (user-agents) to mimic natural, human-like browsing behavior. By distributing your activity, you avoid raising red flags and prevent your primary IP from being rate-limited or permanently blocked.

Without this technique, large-scale scraping projects are likely to stall. Many websites actively detect and throttle automated traffic that could overload their servers or extract proprietary information. Effective proxy and user-agent management is a cornerstone of reliable and scalable lead scraping best practices, ensuring you can gather the necessary data without interruption. It makes your scraper appear as multiple distinct users accessing the site from various locations and devices, greatly reducing the chance of detection.

Practical Implementation

For business users, setting this up manually is a significant technical challenge involving proxy service subscriptions and complex configurations. For example, a recruiter scraping multiple job boards for candidate profiles would need to manage a proxy pool to avoid being blocked after a few hundred requests. Similarly, a marketer collecting influencer data must rotate both proxies and user-agents to bypass sophisticated security measures.

- Actionable Tips for Anti-Blocking:

- Choose the Right Proxy Type: Use residential proxies for high-security sites like LinkedIn, as they are less likely to be detected than datacenter proxies.

- Rotate User-Agent Strings: Maintain a list of current, real browser user-agent strings (e.g., recent versions of Chrome, Firefox, Safari) and rotate them with each request or session.

- Randomize Everything: Instead of cycling through proxies and user-agents sequentially, randomize their selection to better simulate human behavior.

- Simplify with a Managed Solution: For non-developers, managing this infrastructure is daunting. Tools like ProfileSpider handle proxy and user-agent rotation automatically in the background, allowing you to focus on lead generation without worrying about getting blocked.
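The "randomize everything" tip above can be illustrated with a short Python sketch. The proxy addresses are placeholders on `example.com` and the user-agent strings are examples that will go stale, so both are assumptions to replace with values from your own provider and current browsers. `pick_request_profile` is a hypothetical helper name.

```python
import random

# Hypothetical values for illustration only.
PROXIES = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:121.0) "
    "Gecko/20100101 Firefox/121.0",
]


def pick_request_profile(rng=random):
    """Choose a proxy and a user-agent at random rather than in sequence."""
    return {
        "proxy": rng.choice(PROXIES),
        "headers": {"User-Agent": rng.choice(USER_AGENTS)},
    }
```

An HTTP client would then use the chosen values per request or per session. With the `requests` library, for instance, they would typically be passed through its `proxies` and `headers` arguments.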

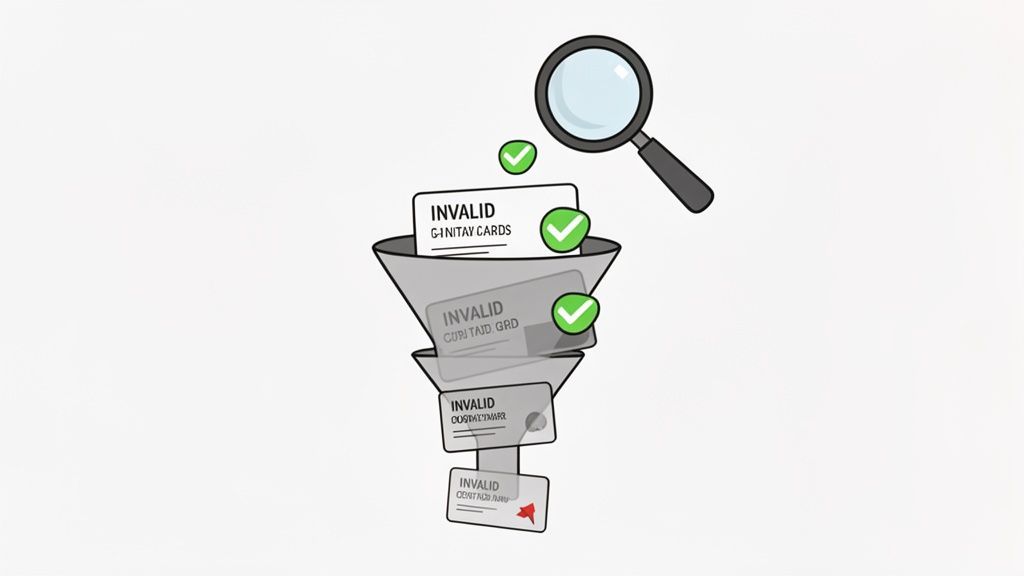

4. Extract and Validate Data Quality

Collecting lead data is only half the battle; the real value comes from its accuracy and usability. Implementing a systematic process for data verification and cleansing is a non-negotiable step in effective lead scraping. This practice involves rigorously checking extracted information for accuracy, completeness, and proper formatting before it enters your CRM or marketing automation systems. High-quality data prevents wasted resources on bounced emails, disconnected numbers, and misdirected outreach, ensuring your sales and marketing efforts are built on a reliable foundation.

Ignoring data quality can cripple your campaigns and damage your sender reputation. A database filled with incomplete, outdated, or duplicate records leads to inefficient workflows and poor decision-making. For instance, sending outreach to malformed email addresses results in high bounce rates, which can get your domain blacklisted by email providers. Prioritizing data validation is a core component of sustainable lead scraping best practices, ensuring that every lead you collect is an actionable asset rather than a potential liability.

Practical Implementation

To put this into practice, integrate data validation directly into your post-scraping workflow. For example, a sales team can validate newly extracted LinkedIn profiles against their existing CRM database to eliminate duplicates before import. Similarly, a marketing team can run a script to standardize all phone numbers to an international format, ensuring compatibility with their auto-dialer software. The goal is to create a clean, standardized, and enriched dataset ready for activation.

- Actionable Tips for Data Quality:

- Use Built-in Cleansing Tools: Leverage solutions like ProfileSpider, which includes features to automatically merge duplicate contacts and enrich profiles with missing emails and phone numbers, streamlining the validation process with a single click.

- Validate Email and Phone Formats: Before importing data, use simple checks or validation tools to confirm email addresses follow a valid structure (e.g., `name@domain.com`) and standardize all phone numbers.

- Set Completeness Thresholds: Establish minimum data requirements for a lead to be considered "qualified." For example, you might discard any record that doesn't include at least a name, company, and valid email address.

- Enrich Critical Missing Data: Use data enrichment services to fill gaps in crucial fields like job titles, company size, or direct contact information. This step turns a partial record into a comprehensive lead profile.
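The format checks and completeness threshold above can be expressed as a few small Python functions. This is a sketch under assumptions: the field names (`name`, `company`, `email`) and the default country code are hypothetical, and the email pattern only confirms a plausible structure. It does not prove that a mailbox exists or that a phone number is in service.

```python
import re

# Loose structural check: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED_FIELDS = ("name", "company", "email")


def is_valid_email(value: str) -> bool:
    """Return True if the value has a plausible email structure."""
    return bool(EMAIL_RE.match(value.strip()))


def normalize_phone(raw: str, default_country_code: str = "1") -> str:
    """Reduce a phone number to '+<digits>', assuming a default country code."""
    digits = re.sub(r"\D", "", raw)
    if raw.strip().startswith("+"):
        return "+" + digits
    return "+" + default_country_code + digits


def is_qualified(lead: dict) -> bool:
    """A lead qualifies only with a name, a company, and a well-formed email."""
    if any(not str(lead.get(field) or "").strip() for field in REQUIRED_FIELDS):
        return False
    return is_valid_email(lead["email"])
```

Phone numbering rules vary widely by country, so a dedicated library is usually a better fit than this simple digit stripping once you handle international lists.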

5. Segment and Target High-Value Leads

Raw scraped data becomes useful only once it is organized into actionable intelligence, and that is the job of segmentation. Rather than blasting a generic message to every lead, this practice involves organizing your extracted profiles into targeted groups based on specific criteria like industry, job title, company size, or location. Effective segmentation is a cornerstone of modern lead scraping best practices because it allows for highly personalized and relevant outreach.

This targeted approach dramatically increases the efficiency and effectiveness of your sales and marketing campaigns. By understanding the unique characteristics of each segment, you can tailor your messaging to address their specific pain points and needs, leading to higher engagement and conversion rates. Instead of a one-size-fits-all strategy, you create multiple, focused strategies that resonate deeply with each distinct audience, ensuring your efforts are concentrated on the most promising opportunities.

Practical Implementation

To implement segmentation effectively, you should begin organizing data the moment you extract it. For instance, a B2B SaaS company might extract profiles of marketing directors, then segment them by company revenue. This allows them to pitch a premium service package to directors at companies with over $10 million in revenue while offering a standard package to smaller businesses. Similarly, a recruiter can extract tech founders and segment them by their company's funding stage to tailor their outreach for specific hiring needs.

- Actionable Tips for Segmentation:

- Tag During Extraction: Use tags like `#Decision-Maker`, `#Startup`, or `#Enterprise` as you scrape profiles. This makes sorting and filtering your data significantly faster later on.

- Create Buyer Personas: Use a tool’s custom list feature, like the one in ProfileSpider, to build dedicated lists for each of your key buyer personas. For example, create separate lists for "SaaS Founders" and "E-commerce VPs."

- Build Hierarchical Lists: Organize your leads in a structured way, such as `All Leads > By Industry > By Company Size > High Priority`, to quickly drill down to your most valuable prospects.

- Update Segments Regularly: The market is always changing. Revisit and update your segments at least monthly to remove outdated leads and re-categorize profiles based on new information, which helps you increase the value of your lists over time.
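As a rough illustration of the revenue-based example and the tagging tip above, segmentation can be as simple as the Python sketch below. The `company_revenue` and `tags` field names are assumptions about how your exported records are shaped, and the $10 million threshold comes from the example earlier in this section.

```python
def segment_by_revenue(leads, threshold=10_000_000):
    """Split leads into 'premium' and 'standard' groups by company revenue."""
    segments = {"premium": [], "standard": []}
    for lead in leads:
        # Leads with unknown revenue fall into the standard group.
        revenue = lead.get("company_revenue", 0)
        key = "premium" if revenue > threshold else "standard"
        segments[key].append(lead)
    return segments


def filter_by_tag(leads, tag):
    """Return only the leads carrying a given tag, such as '#Startup'."""
    return [lead for lead in leads if tag in lead.get("tags", ())]
```

The same pattern extends to other criteria, such as industry or funding stage, by swapping the field that decides the group.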


6. Maintain Local Data Privacy and Security

How you store extracted data is just as important as how you collect it. Every cloud service or third-party server that holds scraped lead information is another system you must trust and secure, which widens your exposure. Storing extracted data locally, on machines your organization controls, keeps sensitive prospect and customer information in your hands and reduces the number of places a breach can occur. This is a core component of responsible lead scraping best practices.

Storing data locally shrinks your attack surface. Data sent to and from a cloud server passes through more systems where it could be intercepted or misconfigured; keeping it on your own encrypted drive removes those intermediaries. Local storage can also make it easier to meet data protection regulations like GDPR, which require tight control over personal data, although where the data lives does not by itself make you compliant. You remain the custodian of the information, so limiting who can reach it is your responsibility.

Practical Implementation

Prioritize tools and workflows that are built for local data storage. For example, enterprise recruiting teams often maintain candidate databases on local machines to support their GDPR obligations and protect sensitive applicant data. Similarly, sales teams can scrape leads and export them directly to a secure, on-premise CRM system, bypassing cloud storage intermediaries altogether.

- Actionable Tips for Local Security:

- Choose Local-First Tools: Opt for solutions like ProfileSpider, which is designed with a privacy-first architecture. It stores all extracted profiles locally in your browser and never on its servers.

- Regularly Export and Back Up: Periodically export your scraped data to local formats like CSV or Excel and store these files in secure, encrypted folders on your computer or a private network drive.

- Isolate Browser Profiles: Create separate browser profiles for scraping activities to keep professional data isolated from personal browsing, reducing the risk of cross-contamination or accidental data leaks.

- Implement Access Controls: If scraped data is shared on a local network, ensure strict access controls are in place so only authorized team members can view or modify the files.
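The export-and-back-up tip above can be paired with restrictive file permissions. The Python sketch below writes a CSV that only the file's owner can read or write; `export_private_csv` is a hypothetical helper, and the approach assumes a POSIX system (Windows largely ignores these mode bits). It does not encrypt anything, so an encrypted folder or disk is still needed for the protection described above.

```python
import csv
import os


def export_private_csv(leads, path, fieldnames):
    """Write leads to a new CSV file readable and writable only by its owner."""
    # O_EXCL refuses to reuse an existing file, so the 0o600 mode always applies
    # to a freshly created file rather than inheriting looser permissions.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(leads)
```

Because the function refuses to overwrite, each export needs a new file name, which also leaves a natural trail of dated backups.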

7. Enrich Data with Multiple Information Sources

Scraping data from a single source often provides an incomplete picture. A lead might have a name and title on a company website but lack an email address, or a GitHub profile may list a Twitter handle but not a full name. This is where data enrichment, the process of augmenting your initial data with information from other sources, becomes a critical best practice. By combining and cross-referencing data points from multiple websites, you can build comprehensive, highly valuable lead profiles. This multi-source approach dramatically increases the utility of your scraped data for outreach and analysis.

A well-enriched lead profile moves beyond basic contact details to include professional history, social media presence, and other contextual information. This transforms a simple name into a qualified lead, enabling personalized communication and more effective campaign segmentation. For anyone serious about lead scraping best practices, enriching data is not just an add-on; it's a fundamental step for maximizing the value of your efforts and achieving a higher return on investment.

Practical Implementation

To implement this, you must develop a workflow that systematically pulls and merges data. For example, you could start by scraping a list of speakers from an event website to get their names and company affiliations. Next, you can use those details to find their LinkedIn profiles to collect job titles and professional experience. Finally, you can search for their company's "About Us" page to find a direct email address, creating a complete and actionable record.

- Actionable Tips for Enrichment:

- Define a Master Record: Create a standard template for your ideal lead profile, outlining all the fields you want to populate (e.g., Full Name, Title, Company, Email, LinkedIn URL, Twitter Handle).

- Prioritize Data Sources: Rank your sources by reliability. A corporate website is often the most accurate source for a job title, while LinkedIn is excellent for career history.

- Automate with Smart Tools: Use tools like ProfileSpider, which can be configured to automatically visit detail pages found during an initial scrape. This one-click workflow captures missing information like phone numbers or emails from secondary pages without manual effort.

- Merge and Deduplicate: After collecting data from various sources, use a tool’s merge functionality or a simple spreadsheet formula to consolidate duplicate entries. This ensures you have one master record per lead, combining the best information from all sources.

- Document Your Sources: For each piece of data in your master record, note where it came from. This creates an audit trail, helping you verify information and assess the reliability of your sources over time.
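The master record, source ranking, merging, and source documentation tips above fit together naturally. The Python sketch below shows one possible way to combine them, with assumptions stated up front: the source names and their ranking are hypothetical, and a single global ranking is a simplification, since in practice the most reliable source can differ per field.

```python
# Hypothetical ranking, most trusted first.
SOURCE_PRIORITY = ["company_website", "linkedin", "event_page"]


def merge_records(records):
    """Merge per-source records for one lead into a master record.

    Each record looks like {"source": str, "fields": {field: value}}.
    Returns (master, provenance), where provenance maps each field to the
    source that supplied it.
    """
    rank = {name: position for position, name in enumerate(SOURCE_PRIORITY)}
    # Unknown sources sort after all ranked ones.
    ordered = sorted(records, key=lambda r: rank.get(r["source"], len(rank)))
    master, provenance = {}, {}
    for record in ordered:
        for field, value in record["fields"].items():
            # Keep the first non-empty value, which comes from the best source.
            if value and field not in master:
                master[field] = value
                provenance[field] = record["source"]
    return master, provenance
```

The provenance dictionary doubles as the audit trail: it records, field by field, where each value in the master record came from.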

8. Automate Export and CRM Integration Workflows

Lead data earns its value only once it reaches the business systems where your team works. Manually transferring this information from your scraping tool to a CRM, Applicant Tracking System (ATS), or email marketing platform is tedious, prone to human error, and a significant bottleneck. Automating your export and integration workflows is a crucial lead scraping best practice that ensures data is actionable, timely, and consistently formatted, accelerating your entire sales or recruitment cycle.

This practice involves setting up seamless pathways for extracted data to flow directly into the software you use daily. By eliminating manual copy-pasting, you not only save countless hours but also minimize the risk of data entry mistakes that can lead to incorrect contact details or lost opportunities. Modern tools are designed to facilitate this, turning a multi-step administrative task into a streamlined, one-click process that gets leads into your pipeline faster.

Practical Implementation

The goal is to make the journey from data extraction to outreach as short as possible. For instance, a sales team can scrape a list of conference attendees and, within minutes, have that list segmented and imported into HubSpot for a targeted follow-up campaign. Similarly, a recruiter can export a list of qualified candidates from a professional network directly into their ATS like Greenhouse or Workday, ready for the next stage of the hiring process.

- Actionable Tips for Automation:

- Use a Tool with Built-in Exports: Choose a solution like ProfileSpider that offers direct bulk export capabilities to formats like CSV, Excel, and JSON. This simplifies importing data into any major CRM or business application.

- Map Fields Before Exporting: To ensure data lands in the right place, use the notes or custom field features in your scraper to label information. For example, add a note like "Source: TechCrunch Article" to a list before exporting, so it can be mapped to a "Lead Source" field in Salesforce.

- Choose the Right Format: Use CSV for standard spreadsheet and CRM uploads. Opt for JSON if you're connecting the data to a custom application or need a more flexible format for technical integrations.

- Perform a Small Test Run: Before exporting thousands of leads, test the process with a small sample of 5-10 contacts. Verify that all fields import correctly into your target system to prevent large-scale errors.
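The field-mapping and format tips above can be sketched in Python as follows. The column names in `FIELD_MAP` are hypothetical stand-ins for whatever your CRM's import template expects, and the functions only build text, so how the file reaches the CRM is left to your own import process.

```python
import csv
import io
import json

# Hypothetical mapping from scraper field names to CRM column names.
FIELD_MAP = {"name": "Full Name", "email": "Email", "source": "Lead Source"}


def to_crm_rows(leads):
    """Rename each lead's fields to the CRM's column names."""
    return [
        {crm_name: lead.get(field, "") for field, crm_name in FIELD_MAP.items()}
        for lead in leads
    ]


def to_csv(leads) -> str:
    """Render leads as CSV text for spreadsheet or CRM upload."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(FIELD_MAP.values()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(to_crm_rows(leads))
    return buffer.getvalue()


def to_json(leads) -> str:
    """Render the same rows as JSON for custom integrations."""
    return json.dumps(to_crm_rows(leads), indent=2)
```

Running either function on a sample of 5-10 leads first, as suggested above, is a cheap way to confirm the mapping before a bulk export.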

9. Monitor and Respect Website Changes and Blocks

Websites are not static; they are constantly evolving with code updates, redesigns, and enhanced security measures. A crucial element of effective lead scraping best practices is building an adaptive process that anticipates and responds to these changes. Continuously monitoring your scraping performance is essential for maintaining long-term data access and respecting the target website's efforts to manage its server load and prevent bot abuse. When you encounter blocks, CAPTCHA challenges, or structural errors, it's a signal to adjust your strategy, not to force your way through.

This adaptive approach ensures the sustainability of your lead generation efforts. Ignoring these signals can lead to permanent IP bans and a damaged reputation, rendering a valuable lead source useless. By treating website changes and blocks as feedback, you can refine your scraping techniques to be more efficient and less intrusive, preserving your ability to gather data ethically over the long run.

Practical Implementation

Successful monitoring involves a combination of automated alerts and manual reviews. When a website redesigns its user interface, for example, the rules your scraper uses to find data like names and job titles will break. An adaptive strategy involves quickly identifying these breaks and updating your extraction rules to match the new structure, minimizing data collection downtime.

- Actionable Tips for Adaptation:

- Set Up Failure Alerts: Configure an alert that fires when your scraping tool fails to extract data from more than a small share of profiles (e.g., 5-10%). A sudden jump in failures usually means the site structure has changed.

- Log and Analyze Blocks: When your scraper is blocked or encounters a CAPTCHA, document the page, the time, and the likely reason. Use this log to identify patterns and adjust your approach, such as using different proxies or reducing your request frequency.

- Simplify with Modern Tools: Instead of manually updating code when a website changes, use a tool like ProfileSpider. Its AI-powered engine automatically adapts to most site structure changes, saving you the headache of constant maintenance.

- Maintain a Changelog: Keep a detailed record of any modifications you make to your scraping process. This documentation is invaluable for troubleshooting and helps your team understand how the scraping logic has evolved in response to website updates.
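A failure alert like the one in the first tip above reduces to a simple ratio check. In this Python sketch, an empty or missing result counts as a failed extraction, and the 5% default threshold is taken from the range mentioned above. Both choices are assumptions to tune for your own workflow.

```python
def failure_rate(results):
    """Share of profiles where extraction returned no usable data."""
    if not results:
        return 0.0
    failed = sum(1 for result in results if not result)
    return failed / len(results)


def should_alert(results, threshold=0.05):
    """Return True when failures reach the threshold share of a batch."""
    return failure_rate(results) >= threshold
```

What happens on an alert (an email, a chat message, pausing the run) is up to you; the point is to notice a structural change before collecting a large batch of empty records.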

10. Document and Audit Data Collection Processes

Treating your lead generation activities like a formal business operation is crucial for long-term success and compliance. This means maintaining comprehensive records of all lead scraping activities, including data sources, extraction dates, field mappings, and the personnel involved. This documentation isn't just administrative busywork; it's a critical practice that ensures regulatory compliance, enables internal auditing, and creates clear accountability for data handling from acquisition to use.

A well-documented process serves as your operational playbook and your first line of defense if your data practices are ever questioned. It provides a clear, traceable history of where each lead came from, when it was acquired, and what rules were followed. In an era of increasing data privacy regulations like GDPR and CCPA, demonstrating this level of diligence is non-negotiable and a core component of ethical lead scraping best practices.

Practical Implementation

Start by creating a master log or spreadsheet for every scraping project. This central document should be the single source of truth for your data collection efforts. For instance, before scraping a professional networking site for sales leads, you would first log your ToS compliance review and then record the date, the specific search query used, the number of profiles extracted, and where the resulting data was stored. This simple habit transforms a one-off task into a repeatable, auditable process.

- Actionable Tips for Compliance:

- Create a Master Log: Use a spreadsheet to track: `Date`, `Data Source`, `Profiles Extracted`, `Compliance Notes (ToS/Robots.txt)`, and `Data Destination`.

- Log User Access: Document who accessed the lead data, when it was exported, and which systems (e.g., CRM, marketing automation platform) it was sent to.

- Utilize Tool Features: In tools like ProfileSpider, use built-in notes or tagging features to attach context directly to your scraped profiles, such as the extraction date and source campaign.

- Schedule Regular Audits: Implement quarterly reviews of your documentation and extraction practices to ensure they remain consistent, compliant, and aligned with your internal policies.
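If you prefer to keep the master log as a file rather than a hand-edited spreadsheet, the tips above could be sketched in Python like this. The column names follow the list above; `append_log_entry` and its parameters are hypothetical names chosen for this example.

```python
import csv
import os
from datetime import date

LOG_COLUMNS = [
    "Date",
    "Data Source",
    "Profiles Extracted",
    "Compliance Notes (ToS/Robots.txt)",
    "Data Destination",
]


def append_log_entry(path, source, profiles_extracted, compliance_notes,
                     destination, on=None):
    """Append one scraping run to the master log, creating the file if needed."""
    entry_date = on or date.today()
    # Write the header only when the log is new or empty.
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=LOG_COLUMNS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "Date": entry_date.isoformat(),
            "Data Source": source,
            "Profiles Extracted": profiles_extracted,
            "Compliance Notes (ToS/Robots.txt)": compliance_notes,
            "Data Destination": destination,
        })
```

The resulting CSV opens directly in any spreadsheet tool, so it can serve as the input for the quarterly audits suggested above.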


Lead Scraping Best Practices — 10-Point Comparison

| Item | 🔄 Implementation complexity | ⚡ Resource requirements | 📊 Expected outcomes | ⭐ Ideal use cases | 💡 Key advantages / Tips |
|---|---|---|---|---|---|
| Respect Robots.txt and Terms of Service | Low: manual review per site | Minimal: time for policy checks & documentation | Compliance, reduced legal risk, fewer bans | Any scraping project requiring legal safety | Avoids legal liability; read ToS and document compliance |
| Implement Rate Limiting and Throttling | Medium: tuning delays and backoff logic | Low–Medium: monitoring and scheduler resources | Fewer rate blocks, stable long-term scraping | High-volume sites or sensitive servers | Prevents overload; start conservative, randomize delays, watch for errors |
| Use Rotating Proxies and User-Agent Strings | Medium–High: proxy management & rotation logic | High: proxy service costs and maintenance | Lower detection risk, geo access, bypass simple IP limits | High-security or geo-restricted sites (e.g., LinkedIn) | Reduces blocking; use residential proxies for strict sites and rotate UAs |
| Extract and Validate Data Quality | Medium: validation rules and enrichment workflows | Medium: processing time, possible third-party services | Higher accuracy, fewer bounces, improved CRM quality | CRM imports, outreach, recruiting pipelines | Improves deliverability; validate emails/phones and dedupe before export |
| Segment and Target High-Value Leads | Medium: requires structured data and rules | Low–Medium: tagging and list management effort | Better conversion rates, focused outreach | Account-based marketing and targeted campaigns | Enables personalization at scale; tag during extraction and refresh segments |
| Maintain Local Data Privacy and Security | Low–Medium: local storage setup and backup routines | Low: local storage, requires export/backup discipline | Strong privacy/compliance, full control over data | Enterprises with strict governance (GDPR/CCPA) | No cloud exposure; export regularly, encrypt backups, train users |
| Enrich Data with Multiple Information Sources | High: merging, matching, and field reconciliation | High: access to sources, enrichment APIs, deduplication | More complete profiles, improved qualification & context | Deep research, high-value lead scoring, detailed prospecting | Increases match rates; prioritize reliable sources and record source provenance |
| Automate Export and CRM Integration Workflows | Medium: field mapping and API/config setup | Medium: API credentials, testing, occasional maintenance | Faster time-to-outreach, fewer manual errors | Large teams needing CRM/ATS sync and scheduled exports | Saves manual work; test with samples, map custom fields, use correct export format |
| Monitor and Respect Website Changes and Blocks | Medium–High: monitoring, error handling, CAPTCHA responses | Medium: alerting, logging, maintenance effort | Fewer disruptions, adaptive scraping, longer uptime | Long-running or mission-critical scraping systems | Set failure alerts, track changes, use smart tools that auto-adapt |
| Document and Audit Data Collection Processes | Low–Medium: consistent logging and retention policies | Low: time to record and store audit data | Regulatory compliance, traceability, accountability | Regulated industries and enterprise teams | Maintain extraction timestamps/sources; keep audit trails and compliance checklists |

Implement Your Strategic Scraping Framework Today

The journey through the intricacies of modern lead generation reveals a clear truth: effective lead scraping is far more than just data extraction. It is a strategic discipline that requires a delicate balance of technical precision, ethical responsibility, and operational efficiency. Moving beyond basic, haphazard collection methods is no longer optional; it's a fundamental requirement for building a sustainable, high-quality sales pipeline. By now, you understand that the foundation of successful lead scraping rests on a framework built from multiple, interconnected pillars.

We've explored the non-negotiable importance of ethical and legal compliance, starting with respecting robots.txt and Terms of Service. We've delved into the technical nuances of being a good digital citizen, such as implementing rate limiting and throttling to avoid overwhelming servers. You’ve also learned how to navigate a complex digital landscape by using rotating proxies and user-agent strings to maintain access without causing disruption. These practices are not just about avoiding blocks; they are about establishing a responsible and sustainable data collection methodology.

From Raw Data to Actionable Intelligence

Mastering the technical side is only half the battle. The true value emerges when you transform raw data into actionable intelligence. This is where the core lead scraping best practices we've discussed truly shine. The process starts with a relentless focus on data quality and validation, ensuring every email, phone number, and profile link is accurate and usable. It continues with strategic segmentation and targeting, allowing you to focus your efforts on high-value prospects who are most likely to convert.

Furthermore, we highlighted the critical need for robust internal processes, including:

- Maintaining Local Data Privacy and Security: Protecting collected data is just as important as collecting it legally. Secure storage protocols and access controls are essential.

- Enriching Data with Multiple Sources: A single data point is often not enough. Augmenting initial scrapes with additional context from other platforms turns a simple contact list into a rich, detailed prospect profile.

- Automating Export and CRM Integration: Manual data entry is a bottleneck. Seamless workflows that push validated, enriched leads directly into your CRM are key to maximizing speed and efficiency.

- Documenting and Auditing Processes: Maintaining a clear record of your data sources, methods, and compliance checks ensures accountability and helps you adapt to future changes.

The Modern Approach: Bridging Strategy and Execution

Implementing this comprehensive framework can seem daunting, especially when relying on traditional scripts or complex software. The constant maintenance, debugging, and legal monitoring required can easily consume more resources than the leads are worth. This is precisely why modern, no-code solutions have become a game-changer for sales, marketing, and recruiting professionals.

Key Takeaway: The goal is not just to scrape data, but to build an intelligent, compliant, and automated lead generation engine. The right tools abstract away the technical complexity, allowing you to focus entirely on strategy and outreach.

ProfileSpider is built from the ground up to embody these best practices. It's designed for the business professional, not the developer. It handles the complexities of ethical scraping, data validation, and profile enrichment behind the scenes with a simple, one-click interface. Instead of wrestling with custom scripts or managing proxy lists, you can focus on defining your ideal customer profile and letting the AI-powered engine deliver clean, enriched, and ready-to-use leads directly into your workflow.

Your next step is to move from theory to practice. Review the ten best practices we’ve covered and assess your current process. Identify the gaps, whether in compliance, data quality, or workflow automation. Then, embrace a solution that empowers you to close those gaps effortlessly. Stop spending your valuable time on tedious manual tasks and start building a powerful, scalable lead generation system today.