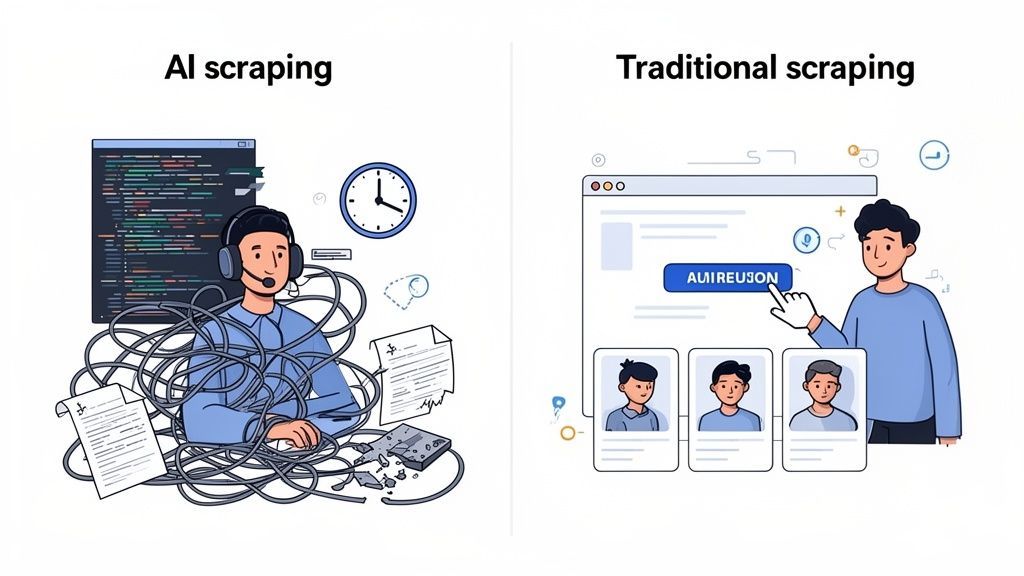

When you get right down to it, the difference between AI scraping and traditional scraping is a classic tale of adaptability versus rigidity.

Traditional scraping is built on hard-coded rules. If a website’s layout changes—even slightly—the scraper breaks. On the other hand, AI scraping is smart enough to understand a page's context. It adapts on the fly, which makes it far more resilient, especially for those of us who aren't developers.

Understanding the Core Differences

Sales pros, recruiters, and marketers all need the same thing: accurate data, and fast. But picking the wrong way to get it can be a massive headache. Your choice between AI and traditional methods will directly impact your efficiency, your budget, and whether you get those crucial leads or candidate profiles without constant technical fires to put out.

A Tale of Two Methods

Think of traditional scraping like following a very precise, unchangeable recipe. A developer writes a script targeting specific HTML elements, like, "find the job title in the third <div> with the class name 'job-info'." If the website owner redesigns the page and that class name vanishes, the recipe fails. The script is broken.

Now, imagine AI scraping as asking a skilled assistant to find that same job title. The AI doesn't get hung up on the specific code; it looks for visual and contextual clues. It sees text that looks like a job title, located near what is clearly a person's name and company. When the website's layout changes, the assistant can still find what you need because it understands the meaning of the data, not just its location in the code.

This is the fundamental reason modern, no-code tools like ProfileSpider are built on AI. It gives sales and recruiting professionals the power to pull profile data with a single click, leaving the fragile, code-heavy workflows of the past behind.

To make this even clearer, here's a quick side-by-side comparison.

AI Scraping vs. Traditional Scraping at a Glance

This table breaks down the fundamental differences between the two data extraction methods at a high level.

| Attribute | Traditional Scraping | AI Scraping |
|---|---|---|
| Method | Relies on fixed HTML selectors (XPath/CSS) | Uses AI to understand page layout and context |
| Flexibility | Brittle. Breaks with minor website updates. | Adaptive. Handles layout changes automatically. |
| Maintenance | High. Requires constant developer intervention. | Low. "Set it and forget it" for users. |
| Technical Skill | Requires coding knowledge (Python, etc.). | No-code. Designed for non-technical users. |
| Ideal For | Developers scraping simple, static websites. | Business users needing data from diverse sources. |

Ultimately, for anyone who isn't a coder but needs reliable data from dynamic places like professional networks or company directories, the advantages of an AI-driven approach are crystal clear. It turns data collection from a recurring technical problem into a simple, productive business task.

How Traditional Scraping Works: A Code-Heavy Approach

For anyone in sales, recruiting, or marketing, the old way of scraping the web is a deeply technical, often frustrating process that lives entirely in code. It’s not something you can just pick up one afternoon; it demands specialized skills and a developer on standby. The whole method is built on a set of rigid, hard-coded rules.

This approach is like giving someone a map with a single, unchangeable route drawn on it. If a road closes or a landmark moves—or in this case, a website updates its layout—that map becomes instantly useless. Data collection stops cold, and your lead gen or candidate sourcing grinds to a halt.

The Manual and Technical Workflow

It all starts with a developer manually inspecting a website’s HTML source code. Their job is to find the exact "address" for each piece of data you want, like a name, job title, or company. In developer-speak, these addresses are called CSS selectors or XPath locators.

Once they've pinpointed these locators, the developer has to write a custom script, usually in a language like Python using libraries such as BeautifulSoup or Scrapy. This script contains explicit instructions: "Go to this specific webpage, find the HTML element with the class name 'profile-name,' and pull the text inside."

This highly specific, rule-based logic is the fundamental weakness of the traditional method.

The moment a website’s developer tweaks the HTML (changing a class name from profile-name to user-name, for example), the scraper’s instructions are suddenly wrong. The script breaks, and the data stops flowing until a developer can go back in and manually fix the code.
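To make that failure concrete, here is a minimal Python sketch of a selector-based extractor. It uses only the standard library's html.parser as a stand-in for BeautifulSoup or Scrapy, and the HTML snippets, the ClassTextExtractor class, and the extract_by_class helper are all made up for illustration.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element that carries a given CSS class.

    Simplification: assumes the target element contains plain text only.
    """

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.capturing = False
        self.results = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.capturing = True
            self.results.append("")

    def handle_endtag(self, tag):
        self.capturing = False

    def handle_data(self, data):
        if self.capturing:
            self.results[-1] += data


def extract_by_class(html, target_class):
    """Hard-coded rule: return the text of elements with exactly this class."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    return [text.strip() for text in parser.results]


# Hypothetical page before and after a redesign renames the class.
page_before = '<div class="card"><span class="profile-name">Jane Doe</span></div>'
page_after = '<div class="card"><span class="user-name">Jane Doe</span></div>'

names_before = extract_by_class(page_before, "profile-name")
names_after = extract_by_class(page_after, "profile-name")

print(names_before)  # ['Jane Doe']
print(names_after)   # []
```

Notice that the second call doesn't raise an error. It just returns an empty list, which is why this kind of breakage often goes unnoticed until someone looks at the output.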

The Constant Cycle of Maintenance

This brittleness creates a massive bottleneck for business users. What looks like a minor website update can completely derail your data workflow, often without any warning. It kicks off a frustrating and expensive cycle:

- Script Breaks: A target website gets a routine update, and your scraper stops working.

- Data Flow Stops: Your team is suddenly cut off from the leads, profiles, or market data they rely on.

- Developer Intervention: You have to pull a developer off other projects to figure out what broke and rewrite parts of the script.

- Repeat: The script works again... but only until the next website change, which starts the whole cycle over.

This constant need for maintenance is where the hidden costs of traditional scraping really pile up. It’s not just about the initial development, but the ongoing expense of repairs and the lost productivity while you're waiting for a fix. If you want to see just how code-heavy this is, you can learn how to build a simple web scraper with Python and export to CSV and get a feel for the technical side.

Ultimately, this reliance on static code makes traditional scraping an inefficient choice for non-technical users who just need reliable data from the dynamic, ever-changing websites where the best leads and candidates live.

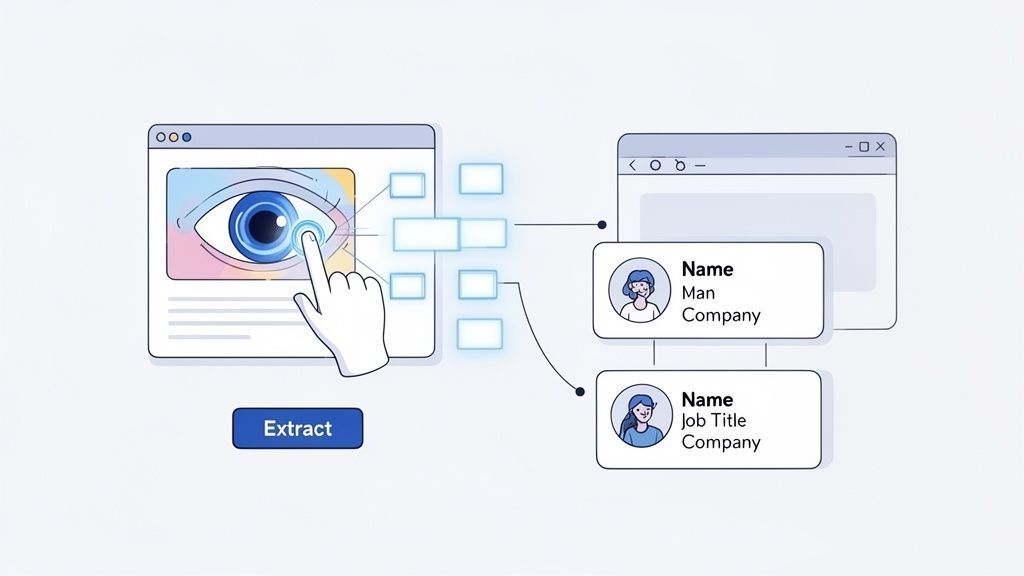

How AI Scraping Works: More Human, Less Code

Forget everything you know about old-school scraping. Traditional methods get tangled up in a website's underlying code, making them brittle and complicated. AI scraping is a completely different beast. It looks at a webpage the way you and I do—visually. It understands the layout and the context.

This is the secret sauce. An AI profile extraction tool doesn't need to be told that a specific bit of HTML is a "name." It recognizes it by its relationship to other data. It sees a name, a job title right next to it, a company, and contact details all clustered together and just knows that's a profile. This is what makes modern no-code tools possible.
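As a rough illustration of the idea, the Python sketch below ignores class names entirely and guesses fields from what the text itself looks like. To be clear, this is a deliberately crude keyword heuristic, not a real AI model and not how ProfileSpider or any other product works; the TITLE_WORDS list, the name pattern, and the sample HTML are all assumptions invented for the example.

```python
import re
from html.parser import HTMLParser

# Hypothetical keyword list; a real system would rely on a trained model.
TITLE_WORDS = {"engineer", "manager", "director", "recruiter", "designer"}
# Very rough "looks like a person's name" pattern: two or more capitalized words.
NAME_PATTERN = re.compile(r"^[A-Z][a-z]+(?: [A-Z][a-z]+)+$")


class TextCollector(HTMLParser):
    """Collect visible text fragments, ignoring tags and attributes."""

    def __init__(self):
        super().__init__()
        self.fragments = []

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.fragments.append(text)


def guess_profile(html):
    """Guess name and title from the content of the text, not its markup."""
    collector = TextCollector()
    collector.feed(html)
    profile = {"name": None, "title": None}
    for text in collector.fragments:
        words = {word.lower() for word in text.split()}
        if profile["title"] is None and words & TITLE_WORDS:
            profile["title"] = text
        elif profile["name"] is None and NAME_PATTERN.match(text):
            profile["name"] = text
    return profile


# The same profile in two different hypothetical layouts.
old_layout = (
    '<div><span class="profile-name">Jane Doe</span>'
    '<span class="job-info">Senior Engineer</span></div>'
)
new_layout = '<section><h2 class="user-name">Jane Doe</h2><p>Senior Engineer</p></section>'

print(guess_profile(old_layout))
print(guess_profile(new_layout))
```

Both layouts produce the same result because nothing in the logic depends on a tag or class name. A real AI scraper would be far more capable than a keyword list, but the principle of reading meaning rather than markup is the same.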

The No-Code Workflow in Action

This is where a modern no-code scraping tool like ProfileSpider really comes into its own. For anyone in recruiting, sales, or marketing, the workflow is ridiculously simple. You can finally cut the developer out of the loop.

Here’s what it actually looks like:

- Go to a page you want to scrape—a LinkedIn search, a list of conference speakers, or a company's team page.

- Click a button to activate the tool.

- The AI scans the page, identifies every single profile, and pulls the data into a perfectly structured list.

That’s it. No messing with CSS selectors, no writing scripts, no configuration. The AI does all the heavy lifting. This visual, context-based approach makes it incredibly resilient to website updates and a total game-changer for anyone who isn't a programmer. It turns lead generation from a technical headache into a simple point-and-click task. If you want to get into the nitty-gritty, we break down exactly how an AI scraper works in our detailed guide.

An AI scraper doesn't just read code; it interprets context. This is the fundamental difference, and it's why it can handle website changes that would instantly break a traditional script.

Proven to Be More Efficient

This shift from rigid rules to contextual understanding has a massive impact on day-to-day business. In the fast-moving world of sales and recruiting, AI-powered scraping delivers a whole new level of efficiency.

A global retail company, for instance, saw an 80% reduction in manual effort after making the switch. AI scrapers effortlessly handle dynamic, JavaScript-heavy sites, pulling data from product pages and social media without skipping a beat when the layout changes.

This resilience is the key. When a website update breaks a traditional scraper, you have to call in a developer to fix it. An AI tool just adapts. For sales and recruiting teams, that means less downtime, more consistent data, and more time spent actually talking to people and closing deals. When you put AI scraping and traditional scraping side by side, the winner is clear for any professional who values speed and reliability.

How Business Users Should Compare Scraping Methods

When you're weighing AI against traditional scraping, the decision really comes down to a few key things that will make or break your team's workflow. For anyone in sales, recruiting, or marketing, this isn't just a technical discussion—it’s about which method gets you clean, usable data without the headaches.

Let’s get practical and look at the real-world differences in accuracy, scalability, and maintenance that business users run into every single day.

Accuracy: The High Cost of Small Errors

In data extraction, accuracy is everything. A traditional scraper is built on a rigid set of rules, which means it can easily start grabbing the wrong information if a website's layout changes, even a tiny bit.

Picture a script built to pull job titles from online profiles. If a site suddenly adds a "previous job title" field right above the current one, your scraper might start collecting outdated info without anyone noticing. That leads directly to poorly targeted outreach and a whole lot of wasted time.

This is where an AI profile extraction tool has a huge advantage. It doesn't just see code; it understands context. It knows that "Current Role" is what you want because it's right next to the current company name and recent employment dates. This kind of contextual awareness stops the silent, costly errors that plague rule-based scripts, making sure your data is consistently on point.
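Here is a small Python sketch of that silent error. The page content is modeled as hypothetical (label, value) rows in on-page order, and both functions are simplified stand-ins: title_by_position mimics a positional rule, while title_by_context uses a tiny hand-written list of "past role" markers in place of real contextual understanding.

```python
# Hypothetical page content as (label, value) rows, in on-page order.
rows_before = [("Role", "Head of Sales")]
rows_after = [
    ("Previous Role", "Account Executive"),  # new field added above the old one
    ("Current Role", "Head of Sales"),
]

# Assumed markers for outdated roles; a real model would infer this from context.
PAST_MARKERS = ("previous", "former", "past")


def title_by_position(rows):
    """Rule-based: always take the first role row on the page."""
    return rows[0][1]


def title_by_context(rows):
    """Context-based: skip rows whose label marks them as a past role."""
    for label, value in rows:
        if not any(marker in label.lower() for marker in PAST_MARKERS):
            return value
    return None


print(title_by_position(rows_before))  # Head of Sales
print(title_by_position(rows_after))   # Account Executive
print(title_by_context(rows_after))    # Head of Sales
```

The positional rule never crashes after the site update. It simply starts returning the outdated title, which is exactly the kind of mistake that slips into a lead list unnoticed.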

An AI scraper’s ability to tell the difference between a current job title and a past one based on context is a game-changer. It avoids the kind of quiet, expensive mistakes that traditional scrapers are famous for making after small site updates.

Scalability: Keeping Up with a Diverse Digital World

The real battle between AI and traditional scraping is fought on the field of scalability. For a business user, this isn't about servers and processing power. It’s about how easily you can point your tool at a new website and get the data you need without having to start over from scratch every time.

Traditional scraping does just fine when you're hitting thousands of pages on a single, unchanging website. But the second you need to grab profiles from multiple places—say, a professional network, a company’s “About Us” page, and a conference speaker list—you hit a wall. Each new source demands a brand-new, custom script. That process is slow, expensive, and just doesn't scale.
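One way to picture why this doesn't scale is the lookup table of per-site rules that a rule-based setup tends to accumulate. The Python sketch below is purely illustrative: the domains, the selector strings, and the selectors_for helper are hypothetical, not taken from any real project.

```python
# Hypothetical per-site rules: every source needs its own hand-written selectors.
SITE_RULES = {
    "network.example": {"name": "span.profile-name", "title": "div.job-info"},
    "company.example": {"name": "h3.team-member", "title": "p.role"},
}


def selectors_for(domain):
    """Return the hand-written selectors for a site, or fail if none exist yet."""
    rules = SITE_RULES.get(domain)
    if rules is None:
        raise LookupError(f"No selectors written for {domain}; a developer must add them")
    return rules


print(selectors_for("network.example"))

try:
    selectors_for("events.example")  # a new source nobody has scripted yet
except LookupError as error:
    print(error)
```

Every new source means another entry that someone has to research, write, and keep up to date, and each entry can break independently whenever its site changes.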

AI, on the other hand, is built for this kind of variety. Today's websites are dynamic and complex, and that's where AI shines. A tool like ProfileSpider uses a single AI engine that can understand and pull data from just about any website, no matter how it’s built. As we head into 2025, industry reports suggest that rule-based scripts can fail 70-80% of the time after a site update, whereas AI tools just adapt. You can see more on AI's adaptability over at ScrapeGraphAI.

What this means for you is simple: you can scale your lead generation or recruiting efforts across the entire web without ever having to put in a ticket with a developer.

Maintenance: The Hidden Tax of Rigid Rules

The constant need for maintenance is probably the biggest, most frustrating difference between AI scraping and traditional scraping. A traditional scraper is a high-maintenance piece of tech. It’s like owning a classic car: it’s impressive when it works, but it feels like it's always in the shop.

Imagine this all-too-common scenario for a recruiter: you set a script to run overnight to find candidates, only to wake up to a blank spreadsheet. The target website pushed a minor update, and your script completely broke. Now, all your sourcing is on pause until a developer can figure out what went wrong, rewrite the code, and get it running again. This endless cycle of breaking and fixing is a massive drain on your time and budget.

AI scraping is designed to be a "set it and forget it" solution for business users. Since the AI automatically adapts to layout changes, the tool just keeps working. It keeps delivering leads and profiles without interruption. This resilience gets rid of the maintenance bottleneck and lets your team do what they're actually paid to do: talk to prospects and candidates.

If you want to dig deeper into the rules and best practices around data gathering, it's worth taking a look at whether website scraping is legal, as this can definitely shape your strategy.

For non-technical users, it's a clear choice. The combination of low maintenance, high accuracy, and effortless scalability makes AI-powered tools the obvious way forward for any modern business.

Detailed Feature Comparison: Traditional vs. AI Scraping

To really see the differences side-by-side, it helps to break down how each approach stacks up against the features that matter most to business teams. The table below cuts through the noise and gives a clear picture of what you can expect from each method.

| Feature | Traditional Scraping (Rule-Based) | AI Scraping (Context-Based) |
|---|---|---|
| Accuracy | High on static sites, but brittle. Fails silently on layout changes. | Consistently high. Understands context to avoid errors from site updates. |
| Scalability | Poor. Requires a new, custom script for each new website structure. | Excellent. A single AI engine works across virtually any website. |
| Maintenance | Constant. Scripts break frequently, requiring developer intervention. | Minimal to none. The AI adapts automatically to most site changes. |
| Initial Setup | Slow & technical. Requires a developer to write and test custom code. | Fast & simple. Usually a one-click process for non-technical users. |
| Cost | High hidden costs due to development, maintenance, and downtime. | Predictable subscription model. Lower total cost of ownership. |
| Anti-Bot Resistance | Easily detected and blocked due to predictable, robotic patterns. | More human-like interaction patterns make it harder to detect and block. |
| Ideal Use Case | Scraping a large volume of data from a single, stable, simple website. | Sourcing data from multiple, diverse, and dynamic websites. |
| Best For | Technical teams with dedicated developer resources for a specific project. | Sales, marketing, and recruiting teams needing reliable data without IT help. |

This breakdown makes it pretty clear. While traditional scraping has its place for very specific, controlled tasks, the flexibility and resilience of AI scraping make it a far more practical and powerful tool for the day-to-day needs of a growing business.

Choosing the Right Tool for Your Business Goals

So, how do you decide between AI scraping vs. traditional scraping? It really just comes down to one simple question: what are you trying to accomplish? Your role, your team's technical resources, and the kind of websites you're targeting will all point you toward the right tool. The whole idea is to match the tool to the task, not the other way around.

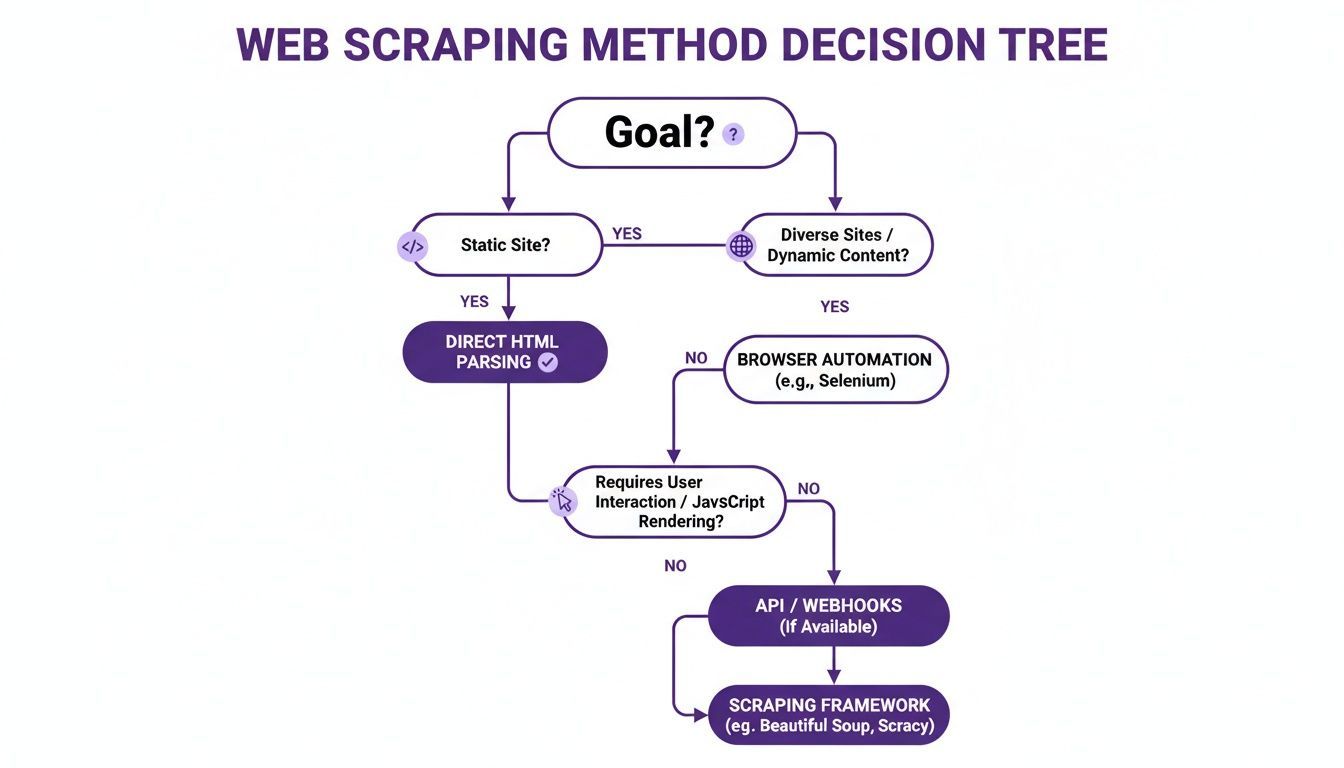

This decision tree gives you a quick visual on the best path to take based on your main data collection goals.

As you can see, the choice gets pretty clear once you define your project. If you're hitting static, uniform sites, traditional methods can work. But for dynamic, complex targets, AI is the obvious way to go.

When Traditional Scraping Makes Sense

Even with all its limitations, traditional scraping still has its place. It's mainly a good fit for people with specific technical skills who are working on well-defined, stable projects.

You should think about a traditional, code-based approach if you check these boxes:

- You're a Developer: You know how to write, deploy, and maintain your own custom scripts in languages like Python.

- Your Target is Simple and Static: You’re pulling data from a website with a basic, unchanging HTML structure.

- Your Data Points are Uniform: You need to grab one or two specific things (like a product price) from thousands of pages that all share the exact same layout.

In these very controlled situations, a custom script can be a direct and efficient way to get the job done. But for most business professionals, this kind of workflow is just too technical and fragile for things like daily lead generation or recruiting.

When AI Scraping Is the Clear Winner

AI scraping was built to solve the exact headaches that sales pros, recruiters, and marketers deal with every day. It's designed to ditch the technical roadblocks and focus on getting results quickly and reliably.

You should reach for an AI profile extraction tool if this sounds like you:

- You're a Non-Technical Professional: You work in sales, recruiting, marketing, or research and need data without having to write a single line of code.

- You Target Diverse and Dynamic Websites: Your leads and candidates are scattered all over the place—on professional networks, company "About Us" pages, event lists, and online directories.

- You Prioritize Speed and Resilience: You can't afford to have your work grind to a halt because a script broke. You need a tool that just adapts to website changes automatically and keeps delivering results.

For business users, the goal isn't to build a scraper; it's to build a list of qualified leads or candidates. AI-powered tools cut directly to the outcome, eliminating the technical process entirely.

Cost and maintenance also tell a completely different story. Traditional scraping can look cheap for simple jobs, but the costs skyrocket as things get more complex. AI scraping flips this script completely for dynamic sites, proving to be more cost-effective because it's so much faster to set up and requires way less upkeep. In fact, because AI adapts automatically, it can slash the need for manual fixes by up to 80%. You can dig deeper into the AI versus conventional web scraping cost dynamics to see a full breakdown of the numbers.

For this group, an intelligent no-code scraping tool like ProfileSpider is the perfect solution. It’s built from the ground up for the way business professionals actually work, offering a one-click process that requires zero technical skills. With features like dual person and company profile detection, it pulls comprehensive data from any source you throw at it. Most importantly, its local-first privacy model means you’re always in full control of your data, making it a safe and powerful choice for modern lead generation and talent sourcing.

Getting Started with AI Scraping in Minutes

Let's move past the theory. Getting your hands dirty with AI scraping is a lot easier than you might think. Forget the code-heavy, complex setup of traditional methods; AI-powered tools are built to be picked up by any business professional, fast.

This isn't just marketing fluff. To show you what we mean, we'll walk through the process using ProfileSpider. This simple example really drives home the core difference in the AI scraping vs. traditional scraping debate: it’s all about simplicity.

A Practical Walkthrough

Honestly, the whole thing takes less time than making a cup of coffee. It’s a dead-simple, three-step workflow that requires zero technical background. None.

Here’s the entire process:

- Install the Tool: First things first, add the free ProfileSpider Chrome extension to your browser. You can grab it from the official Chrome Web Store in about ten seconds.

- Navigate to a Target Page: Head over to any website that displays profile data. Think company "About Us" pages, lists of event speakers, professional network search results, or any online directory.

- Click to Extract: Open the extension and hit the big "Extract Profiles" button.

That's it. There is no step four.

Right away, the AI gets to work. It scans the page, figures out the layout on its own, and pulls all the contacts into a clean, structured list right inside the extension. That single click just replaced the entire old-school process of inspecting HTML, writing scripts, and chasing down bugs.

From Raw Data to Actionable Leads

Once the data pops up, you can use it immediately. Right from the ProfileSpider extension, you can sort contacts into custom lists, enrich them with more details, or export everything to a CSV.
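If someone on your team does want to tidy an exported CSV before it goes into a CRM, a few lines of Python are enough. This is only a sketch: the column names (name, title, company, email) and the sample rows are assumptions for the example, not the actual export format of ProfileSpider or any other tool.

```python
import csv
import io

# Stand-in for an exported file; the column names here are assumptions.
exported = io.StringIO(
    "name,title,company,email\n"
    "Jane Doe,Head of Sales,Acme,jane@acme.example\n"
    "Jane Doe,Head of Sales,Acme,JANE@acme.example\n"
    "Sam Lee,Recruiter,Globex,sam@globex.example\n"
)


def dedupe_by_email(rows):
    """Keep the first row for each email address, ignoring case and spaces."""
    seen = set()
    unique = []
    for row in rows:
        key = row["email"].strip().lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


contacts = dedupe_by_email(csv.DictReader(exported))
print([contact["name"] for contact in contacts])  # ['Jane Doe', 'Sam Lee']
```

With a real file, you would swap the io.StringIO stand-in for open("your-export.csv", newline="") and adjust the column names to match whatever the export actually contains.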

This seamless path from extraction to export means you can have fresh data ready for your CRM or outreach campaigns in minutes, not days. It’s a perfect illustration of how modern AI scrapers are built for business outcomes, not for drawn-out technical projects. For a deeper dive, check out our guide on how to use AI scraper tools to generate sales leads, which builds on these workflows.

When you see it in action, the choice becomes pretty clear for anyone without a developer on standby. It’s about spending less time wrestling with technology and more time actually connecting with the right people.

Got Questions? We've Got Answers.

When people start digging into AI versus traditional scraping, a few key questions always pop up. Let's tackle them head-on, based on what we see professionals asking every single day.

Isn't AI Scraping Just More Expensive?

It’s easy to look at a subscription fee for an AI tool and think it’s pricier, but that’s rarely the whole story. The reality is, the total cost of ownership is often much lower than sticking with old-school scraping methods.

Think about the hidden costs of traditional scraping. You're not just paying a developer once. You're paying them to build the script, then paying them again for maintenance every time a website changes its layout and the scraper breaks. On top of that, you have the lost productivity while your team is stuck waiting for a fix. An AI tool's predictable subscription fee actually replaces all those unpredictable, recurring expenses.
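A back-of-the-envelope comparison can be sketched in a few lines of Python. Every number below is a placeholder chosen only to show the arithmetic, not a real price, quote, or benchmark, and the two functions are hypothetical helpers. Plug in your own figures; depending on the inputs, the comparison can tip either way.

```python
def traditional_yearly_cost(build_hours, fixes_per_year, hours_per_fix,
                            hourly_rate, downtime_cost_per_fix):
    """One-off build cost plus recurring repair and downtime costs for a year."""
    build = build_hours * hourly_rate
    repairs = fixes_per_year * hours_per_fix * hourly_rate
    downtime = fixes_per_year * downtime_cost_per_fix
    return build + repairs + downtime


def subscription_yearly_cost(monthly_fee):
    """A flat subscription, billed monthly."""
    return 12 * monthly_fee


# Placeholder inputs for illustration only; replace them with your own numbers.
traditional = traditional_yearly_cost(
    build_hours=40,
    fixes_per_year=6,
    hours_per_fix=5,
    hourly_rate=80,
    downtime_cost_per_fix=200,
)
subscription = subscription_yearly_cost(monthly_fee=50)

print(traditional)   # 6800
print(subscription)  # 600
```

The useful part is the shape of the formula rather than the totals: the traditional side has terms that grow with every site change, while the subscription side stays flat.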

Can AI Scrapers Really Work On Any Website?

This is where AI truly shines. Traditional, rule-based scrapers often hit a wall with modern, JavaScript-heavy websites. They break easily or demand a ton of custom code just to get them working. AI, on the other hand, is built for that complexity. It doesn't just see code; it understands the visual layout of a page, allowing it to adapt to almost any website structure you throw at it.

For instance, a no-code scraping tool like ProfileSpider has no problem with the kind of sites business users need most:

- Professional networks and tricky social media platforms.

- Corporate "About Us" pages and team directories.

- Lists of event speakers and conference attendees.

That kind of adaptability means you can pull leads and profiles from wherever they are, not just the low-hanging fruit on simple, static pages.

How Does AI Scraping Handle Data Privacy?

This is a huge one, and a critical differentiator in the AI scraping vs. traditional scraping debate. Any reputable AI tool today is built from the ground up with modern privacy standards in mind, putting you in control of your data.

Some old-school scraping setups, especially hosted ones, require you to send your data to a third-party server, which can be a massive security headache. The best modern AI tools take a "local-first" approach, giving privacy-focused businesses a huge advantage.

ProfileSpider, for example, is a local-first tool. What does that mean? All the profile data you extract is stored directly and securely right inside your own browser. It never touches our servers. You—and only you—own and control your data, which is exactly how it should be.