As a recruiter, sales professional, or marketer, you know that valuable data—like leads and candidate profiles—is scattered across countless websites. The challenge is collecting it efficiently. You want to build a simple web scraper with Python to export data to a CSV, but the process can seem technical and time-consuming.

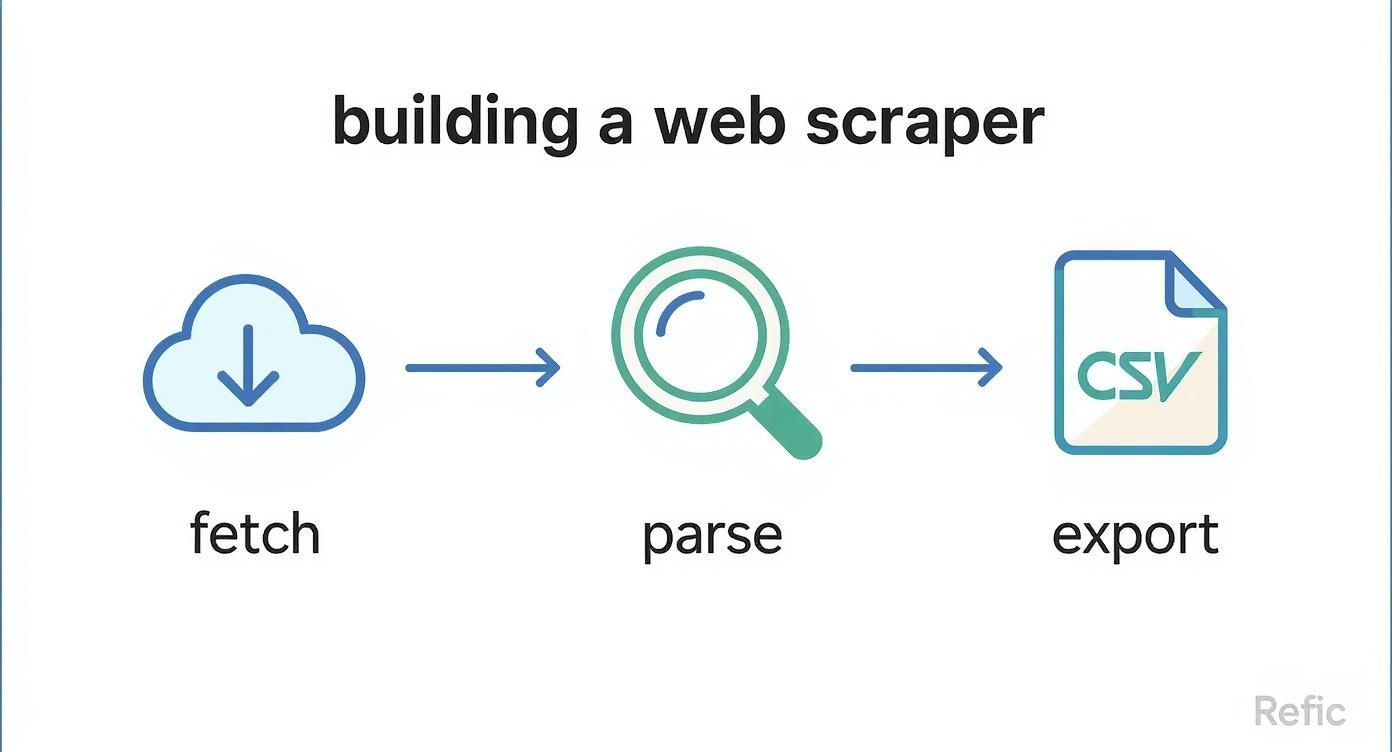

It really boils down to three core steps: you fetch a website's HTML, parse that HTML to find the data you need, and then export that organized information into a clean CSV file.

While the traditional Python method is powerful, it's often a detour from your real job. This guide will walk you through the manual coding process to show you what's involved, and then introduce a modern, one-click alternative designed for professionals who need results, fast.

The Traditional Way: Web Scraping with Python

For a sales professional trying to build a lead list from an industry directory, the goal is simple: turn website data into a usable spreadsheet. The classic Python approach accomplishes this with a few key libraries, but it requires a developer's mindset and a bit of coding.

Understanding this manual process is crucial because it highlights the exact pain points that modern, no-code tools like ProfileSpider are designed to solve. The entire workflow can be broken down into three main actions: Fetch, Parse, and Export.

This visual lays out the basic flow perfectly.

As you can see, scraping isn't just one action. It's a sequence of technical steps, and each one needs the right tool and specific expertise.

The Essential Python Toolkit

One of the biggest reasons Python is a popular choice for web scraping is its ecosystem of libraries built for these exact tasks. For simple, static websites, the go-to trio is almost always requests for fetching the page, BeautifulSoup for parsing the HTML, and pandas for handling the data and exporting to CSV.

You can install them all with a single command: pip install requests beautifulsoup4 pandas. While accessible, it's the first of many technical steps. For a deeper dive, the folks at scrapingbee.com have a great overview of the process.

Here’s a quick look at the core libraries.

Python Web Scraping Toolkit Breakdown

| Library Name | Primary Function | Why It's Used |

|---|---|---|

| requests | HTTP Requests | It acts like a browser, visiting a URL and grabbing the raw HTML source code of the page. |

| BeautifulSoup | HTML Parsing | This tool sifts through the messy HTML to pinpoint and extract the specific data you need. |

| pandas | Data Structuring | It organizes your scraped data into a clean, table-like format that's perfect for exporting to a CSV. |

These three libraries form the foundation of most simple web scrapers. Let's break down what each one does in plain English:

- Requests: Think of this as an automated browser. Its only job is to go to a URL you provide, grab the raw HTML source code of the page, and bring it back for the next step.

- BeautifulSoup: Once you have the HTML, BeautifulSoup is your high-tech search tool. It helps you navigate that complex code to find and pull out the exact bits of information you want—like a person's name, job title, or company.

- Pandas: After you've collected all your data points, Pandas is the organizer. It takes everything you've scraped and structures it into a clean, spreadsheet-style format that can be instantly saved as a CSV file, ready for your CRM or ATS.

Step-by-Step: The Manual Coding Workflow

So, what does it actually take to build a web scraper from scratch? It’s not just writing a few lines of code; it's a multi-step investigation that demands a developer’s eye for detail.

The goal here isn't to turn you into a programmer. It's to show the technical hurdles involved so you can see why a custom-coded scraper isn't always the fastest route for busy professionals who just need data.

Let's walk through the typical workflow for a recruiter trying to source candidates from an online portfolio site.

Step 1: Playing Detective with the Website's HTML

Before writing any code, a developer has to put on their detective hat. They'll open the target webpage—say, a list of conference speakers—and use the browser's "Developer Tools" to inspect the underlying HTML.

This is like studying a building's blueprint. The developer must hunt down the specific HTML tags and class names that contain the data—like an <h2> tag holding a name or a <p class="job-title"> containing a role. This requires a solid grasp of HTML and CSS just to figure out where the information lives.

Step 2: Dealing with Modern Website Roadblocks

Here’s where it gets tricky. Many modern websites use JavaScript to load profile data after the main page is already on your screen. This is a massive roadblock for simple scrapers.

If a developer looks at the initial HTML and the data isn't there, they hit a wall. They need a far more sophisticated tool—one that can pretend to be a real browser, wait for all the JavaScript to run, and then scrape the final content. This one complication can easily double a project’s complexity.

The Developer's Reality: What looks like a simple list of names on your screen is often a complex web of dynamically loaded content. A developer has to account for this from the start, which often doubles the time and effort needed to build a reliable scraper.

Building a simple web scraper with Python to get data into a CSV file is a methodical process. It kicks off with analyzing the target site’s HTML to spot data elements and hurdles like JavaScript rendering. When data loads dynamically, you have to bring in heavier tools like Selenium. If you want to dive deeper into the technical side, the team at Thunderbit's blog breaks down the tools and process in more detail.

Step 3: Writing the Code to Extract the Data

Once the data targets are identified, the coding starts. A developer writes a Python script using libraries like requests and BeautifulSoup to:

- Fetch the Page: Send an HTTP request to the website's server to download the raw HTML.

- Parse the Content: Transform that messy code into a structured, searchable format.

- Extract Specific Data: Write precise commands to find and pull out the text from the HTML tags they identified earlier (e.g., "Find all

<h2>tags with the classspeaker-name"). - Handle Errors: Add logic to manage situations where data is missing, a profile is private, or the page layout changes.

This process is a cycle of trial and error. A tiny design change on the target website can break the entire script, sending the developer back to fix the code. This ongoing maintenance is a hidden cost. For a sales or recruiting professional, a broken scraper means a stalled campaign until a developer can patch it.

Step 4: Turning Scraped Data Into a Usable CSV File

Pulling raw data is only half the battle. If you’re in sales, recruiting, or marketing, that information is useless until it’s organized into a clean, actionable format. This is the final step: turning that jumble of text into a structured CSV file ready for your CRM or spreadsheet.

Developers use Python’s Pandas library for this. It takes all the individual bits of information the scraper collected and arranges them neatly into a table with columns and rows.

Structuring Data for Real-World Use

The goal is to create a dataset you can use right away. This means every row should be a single profile (a lead or candidate), and each column should be a specific data point, like "Name," "Job Title," or "Company."

From a coder’s perspective, the workflow looks like this:

- Gathering the Data: All scraped details are collected into a list of dictionaries, where each dictionary holds one person's profile information.

- Creating a DataFrame: That list is loaded into a Pandas DataFrame, which is basically an in-memory table.

- Cleaning and Normalizing: The code must account for profiles with missing information. If a contact number isn't found, the script needs to insert a placeholder like "N/A" to keep the table's structure intact.

- Exporting to CSV: Finally, with a single command, the DataFrame is exported into a CSV file.

This final file is universally compatible with tools like Microsoft Excel, Google Sheets, and virtually every CRM and Applicant Tracking System (ATS). For a detailed breakdown, see our guide on how to scrape data from a website into Excel.

The Bottom Line for Business: The value of scraped data is tied to how well it's organized. A structured CSV lets you easily filter, sort, and import—essential for building lead lists or candidate pipelines. A messy file just creates more manual cleanup, defeating the purpose of automation.

Going the manual route with code demands precision. A tiny error can lead to misaligned columns, making the export worthless. This is where the simplicity of a no-code tool really shines.

The Modern Alternative: One-Click Scraping with ProfileSpider

We just walked through building a Python scraper. You’ve seen what it takes—inspecting HTML, writing code, debugging errors, and maintaining it.

For recruiters, sales pros, and marketers, that’s a significant detour. Your goal isn’t to become a developer; it's to get the data you need for your job.

This is where ProfileSpider changes the game. It’s a no-code, one-click solution that automates the entire process, letting you focus on results instead of technology.

From Technical Project to One-Click Workflow

Instead of spending hours on a technical project, ProfileSpider shrinks the entire process down to a single click. It does the same thing as a Python script—grabs profile info and saves it to a clean CSV—but it cuts out every single line of code.

There are no libraries to install, no HTML to inspect, and no export logic to write. You just navigate to a page with the profiles you want, open the ProfileSpider extension, and click one button. Its AI-powered engine intelligently finds and extracts complete profiles, capturing names, job titles, companies, locations, and contact info automatically. For more on this trend, see our guide on automating web scraping with no-code tools.

Manual Python Scraping vs. ProfileSpider

For a professional who needs data, the choice comes down to one thing: what's the best use of your time? Let's compare the two methods.

| Feature | Manual Python Scraping | ProfileSpider (No-Code) |

|---|---|---|

| Setup Time | Hours to days (installing Python, libraries, IDE) | Seconds (installing a browser extension) |

| Technical Skill | Requires Python, HTML, and library knowledge | None required, completely intuitive |

| Maintenance | Constant updates needed when websites change | Handled automatically by the ProfileSpider team |

| Speed to Data | Slow; involves coding, testing, and debugging | Instant; one click to extract and save profiles |

| Exporting | Requires writing custom code with Pandas | Built-in one-click export to CSV, JSON, Excel |

| Data Privacy | Data stored in files on your local machine | All data stored securely in your local browser |

The difference is clear. Building a scraper is a technical project; using ProfileSpider is a business solution.

The Professional's Advantage: Your goal isn't to learn how to build a web scraper. Your goal is to get data into a CSV. ProfileSpider gets you to the finish line in seconds, freeing you up for high-value work like outreach, analysis, and closing deals.

Ultimately, ProfileSpider delivers the same result as a custom script but in a fraction of the time and with zero technical friction. It puts powerful, privacy-first data extraction into the hands of the people who need it most.

Why No-Code Scraping Is a Smarter Business Decision

The ability to gather data from the web is no longer a niche technical skill—it's a core part of how modern businesses operate. Sales, marketing, and recruiting teams all run on fresh, real-time information.

While building a simple Python scraper is a great learning exercise, it’s not a scalable solution for business teams. Custom scripts need constant maintenance from developers, creating bottlenecks that slow down entire campaigns. When a target website changes its layout, your Python script breaks. Lead generation grinds to a halt until a developer can fix it.

This is where no-code tools like ProfileSpider offer a massive strategic advantage.

Empower Your Team, Not Your Developers

No-code solutions remove the technical hurdles, empowering the people who actually need the data to get it themselves, instantly.

- Sales teams can build laser-focused prospect lists from LinkedIn or industry directories in minutes, not days.

- Recruiters can source candidate profiles from portfolio sites and job boards without filing a ticket with the IT department.

- Marketers can pull influencer data for outreach campaigns whenever they need it.

When you put data collection tools directly into the hands of your team, you slash your dependency on developers and accelerate your entire business cycle.

Gaining Speed, Control, and Privacy

Beyond speed, modern no-code tools are built with data privacy and user control in mind. While a custom script might dump files onto your computer, ProfileSpider stores all extracted data locally in your browser using IndexedDB.

This is a huge benefit. No profile information is ever sent to an external server without your consent. This gives you complete control over the data you collect and helps you stay compliant with regulations like GDPR.

This blend of speed, accessibility, and privacy is why no-code is becoming essential. It's a trend that goes far beyond web scraping; read more about the growing impact of no-code solutions in professional environments. For professionals in sales, recruiting, and research, adopting a no-code approach means spending less time fighting with technology and more time getting results.

Frequently Asked Questions

When it comes to web scraping, a few common questions always come up. Here are the answers you need.

Is It Legal to Scrape Data from Websites?

This is the big one. Generally, scraping publicly available data is legal, but you have to follow the rules. Always check a website's terms of service and its robots.txt file, which tells bots what's off-limits. Scraping personal data or anything behind a login is almost always prohibited. For professionals gathering public profile data for outreach, the practice is standard, but it's crucial to be responsible. Learn more in our detailed guide on the legalities of website scraping.

How Do I Handle Websites That Block Scrapers?

Many sites use techniques like CAPTCHAs or IP rate limiting to block automated tools. With a Python script, you'd need to write complex code to manage proxies and mimic human behavior to avoid detection—a major technical headache. ProfileSpider is designed to handle this. Because it runs as a browser extension, its activity appears more like a real user, significantly reducing the risk of being blocked.

Can I Scrape Data from Social Media Sites Like LinkedIn?

Scraping social media is tricky and almost always violates their terms of service. Running a large-scale, automated script is a surefire way to get your account banned. A tool like ProfileSpider provides a smarter, safer approach. Instead of running an aggressive background script, it helps you extract profile information from pages you are actively viewing, allowing you to collect data for lead generation or recruiting without raising red flags.

What Is the Main Advantage of a No-Code Tool Over a Python Script?

It all comes down to efficiency and focus. As a recruiter, marketer, or sales professional, your job is to use data, not spend days debugging a script. A no-code tool like ProfileSpider transforms what would be a multi-day coding project into a single click.

It eliminates the need for setup, coding, and maintenance. This shift saves hundreds of hours, allowing you to focus on what actually drives revenue and growth: using data to connect with people and grow your business.