For anyone building a list, one of the most frustrating moments is when your reliable data scraper, which functioned perfectly the day before, suddenly returns nothing. You might see an empty table, a spinner that never stops, an export that produces an empty CSV, or a "No Data Found" error. Your workflow comes to a standstill, leaving you to puzzle over what went wrong.

If you're using a straightforward scraper like Instant Data Scraper, DataMiner, or WebScraper.io, this scenario is quite common. These tools are excellent for quick data extractions but are also prone to issues. Scrapers often fail because websites are constantly evolving. A small change to a site's HTML structure, a move to dynamic content, or new anti-scraping measures can quickly render your scraper ineffective.

This guide is designed for non-technical users, such as recruiters, sales representatives, and researchers, who need a swift solution. We'll explain why your scraper failed, walk through simple troubleshooting steps, and suggest a dependable alternative to help you obtain the data you need immediately.

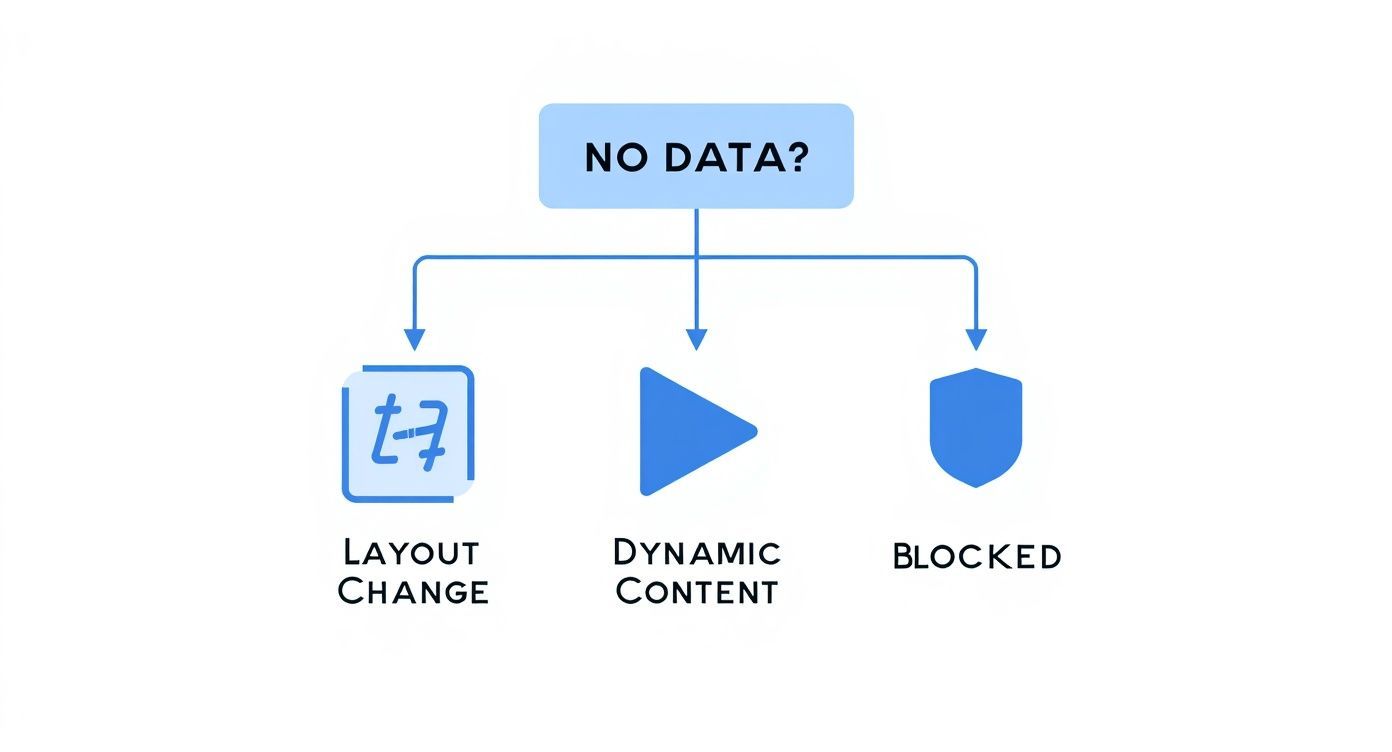

Why Your Data Scraper Suddenly Stopped Working

Simple data scrapers function by following a specific "map" of a website's code (HTML and CSS) to locate and extract information. When a website's developers modify this map, even slightly, your scraper can become ineffective. Here are the most common reasons your data scraper is not working:

Website Layout Changes: This is the primary reason. Developers frequently update their sites, altering the CSS classes or HTML structure your scraper relies on. The data remains, but your tool can no longer locate it.

Dynamic Content (JavaScript): Many modern websites use JavaScript to load data after the page initially appears (such as "infinite scroll" lists). If your scraper runs too quickly, it captures the page before the content has loaded, resulting in an empty file.

Unsupported Page Types: General-purpose scrapers are intended for simple tables or lists. They often struggle with modern pages that have complex layouts, such as card-based profile views on social networks or directories.

Expired Cookies or Login Sessions: If you're scraping a site that requires a login, your session might expire in the background. Your scraper loses access and can no longer retrieve the data.

Rate Limits or IP Blocking: The website may have detected automated activity and temporarily blocked you to prevent data extraction. This often occurs if you try to gather too much data too quickly.

Outdated Scraper Extension: If your scraper extension hasn't been updated recently, it may no longer be compatible with the latest version of your browser or the websites you're targeting.

Conflicts with Other Extensions: Another Chrome extension could be interfering with your scraper's ability to function correctly.

Browser Update Changed Extension API: Recent updates to the browser may have altered the extension API, affecting your scraper's performance.

Chrome Version Mismatch: If your installed Chrome version is older or newer than the versions your scraper extension supports, the extension may malfunction.
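The first cause in the list, a layout change, can be illustrated with a minimal Python sketch. This is not how any particular extension is implemented. It is a simplified stand-in that looks up text by CSS class, and the sample pages and the class names `name` and `person-name` are made up for illustration.

```python
from html.parser import HTMLParser


class ClassTextCollector(HTMLParser):
    """Collects the text of every element that carries a given CSS class.

    Simplification: assumes the matching element contains only plain text.
    """

    def __init__(self, wanted_class):
        super().__init__()
        self.wanted_class = wanted_class
        self.capturing = False
        self.results = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.wanted_class in classes:
            self.capturing = True
            self.results.append("")

    def handle_endtag(self, tag):
        self.capturing = False

    def handle_data(self, data):
        if self.capturing:
            self.results[-1] += data


def extract(html, css_class):
    """Return the text of all elements with the given class."""
    parser = ClassTextCollector(css_class)
    parser.feed(html)
    return parser.results


# Hypothetical page before and after a redesign: same data, renamed class.
OLD_PAGE = '<table><tr><td class="name">Ada Lovelace</td></tr></table>'
NEW_PAGE = '<table><tr><td class="person-name">Ada Lovelace</td></tr></table>'

print(extract(OLD_PAGE, "name"))  # ['Ada Lovelace']
print(extract(NEW_PAGE, "name"))  # []
```

The name is still on the redesigned page, but the old "map" no longer points to it, so the result is empty.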

10 Practical Fixes to Try Right Now

Before giving up, consider these straightforward troubleshooting steps. Often, one of these solutions will restore your scraper's functionality.

Fully Scroll the Page First: Many websites employ "lazy loading" or "infinite scroll." Manually scroll to the bottom of the page to ensure all desired data is visible in your browser before running the scraper.

Refresh and Re-Login: A classic remedy. Your login session might have expired. Log out completely, log back in, and attempt scraping again. A hard refresh (Ctrl+Shift+R or Cmd+Shift+R) may also be beneficial.

Update the Scraper Extension: Visit your browser's extensions management page (chrome://extensions/) to see if an update is available for your scraper. Developers release updates to accommodate website changes.

Test in an Incognito Window: Open an Incognito or Private window, which usually disables extensions by default (in Chrome, you may need to enable "Allow in Incognito" for the scraper itself on the extensions page). If the scraper functions here, the issue may be a conflict with another extension. Disable your other extensions one by one to identify the cause.

Reinstall the Scraper Extension: If other steps fail, uninstalling and reinstalling the scraper can clear corrupted settings. Note that this might delete any saved scraping rules or configurations.

Reduce the Amount of Data: Scraping thousands of rows at once might hit the website's rate limits. Try scraping a smaller, manageable amount of data first (e.g., the first 50 rows).

Disable Other Extensions: Temporarily disable all other browser extensions except your scraper. Extensions, such as ad blockers or security tools, might interfere with how a page's content loads.

Clear Your Browser Cache: Your browser might retain an outdated or broken version of a page. Clear the cache and cookies for the specific site you're scraping to load the most recent version.

Wait and Try Again Later: If you suspect you've been rate-limited or temporarily blocked, waiting 30-60 minutes and trying again with a smaller, slower scrape often resolves the issue.

Check for Website Redesign: Websites frequently update their layouts or structures, which can impact scraping. Verify if the site has undergone any redesigns that might require adjustments to your scraper settings.
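For readers who script their own collection, the "reduce the amount of data" and "wait and try again later" fixes can be sketched in Python as follows. All names here (`chunked`, `backoff_delays`, `scrape_politely`, `fetch_batch`) are hypothetical. The batch size of 50 and the 30 to 60 minute waits mirror the figures above, while the 5-second pause between batches is an assumption.

```python
import time


def chunked(rows, size=50):
    """Yield successive batches of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def backoff_delays(first_wait_s=1800, factor=2, attempts=3, cap_s=3600):
    """Return waits (in seconds) that grow after each failed attempt.

    Defaults start at 30 minutes and are capped at 60 minutes.
    """
    return [min(first_wait_s * factor ** i, cap_s) for i in range(attempts)]


def scrape_politely(rows, fetch_batch, pause_s=5, sleep=time.sleep):
    """Process rows in small batches, pausing after each batch.

    `fetch_batch` is a placeholder for whatever actually retrieves the data.
    """
    collected = []
    for batch in chunked(rows):
        collected.extend(fetch_batch(batch))
        sleep(pause_s)
    return collected


print([len(batch) for batch in chunked(list(range(120)))])  # [50, 50, 20]
print(backoff_delays())  # [1800, 3600, 3600]
```

Smaller batches with pauses make a run look less like a burst of automated traffic, and growing waits avoid retrying while a temporary block is still in place.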

Why Some Pages Just Can't Be Scraped with Simple Tools

If your scraper still returns nothing after you have tried these fixes, the problem may not be your tool at all. It may be the website itself.

Many modern websites are constructed in ways that inherently challenge traditional, table-based scrapers. These sites frequently employ complex JavaScript frameworks (like React or Vue.js) that render content dynamically. The data visible on the screen is not present in the straightforward HTML that your scraper initially loads.
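One rough way to check whether a page falls into this category is to compare what you see on screen with the page's raw HTML (what "View page source" shows). The Python sketch below assumes you have saved that source as a string. The two sample pages and the `render(...)` call inside the script tag are invented for illustration.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Gathers text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.skip = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip:
            self.skip -= 1

    def handle_data(self, data):
        if not self.skip:
            self.chunks.append(data)


def appears_in_static_html(raw_html, text_seen_on_screen):
    """True if the text is present in the HTML before any JavaScript runs."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    return text_seen_on_screen in " ".join(parser.chunks)


# Hypothetical pages: one ships the data in HTML, one builds it with JavaScript.
STATIC_PAGE = "<ul><li>Ada Lovelace</li></ul>"
JS_PAGE = '<div id="root"></div><script>render(["Ada Lovelace"])</script>'

print(appears_in_static_html(STATIC_PAGE, "Ada Lovelace"))  # True
print(appears_in_static_html(JS_PAGE, "Ada Lovelace"))      # False
```

If a name you can plainly see in the browser is missing from the raw HTML, the page is likely rendered by JavaScript, and a scraper that reads only the initial HTML will come back empty.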

These difficult-to-scrape pages often feature:

Complex, nested HTML structures.

Card-based layouts instead of simple rows and columns.

Data that only appears after a button is clicked or an element is hovered over.

Advanced anti-bot protections that can detect and block automated tools.

Examples of such page types include:

LinkedIn profile cards

Infinite scroll lists

Dynamic job boards

Encountering a page like this can make using a simple scraper feel as futile as trying to fit a square peg into a round hole. Recognizing the tool's limitations and opting for a more suitable alternative can save you hours of frustration.

Alternatives to Use When Your Scraper is Broken

When you're on a tight deadline and need data immediately, troubleshooting a malfunctioning tool is not an option. Here are some effective alternatives to quickly achieve your data extraction goals.

ProfileSpider: This tool is particularly suited for users with minimal technical expertise who need to gather profile or contact details. ProfileSpider employs artificial intelligence to automatically detect and extract data from any website without requiring any configuration. It offers a straightforward, one-click solution that effectively works on complex and dynamic pages where other scrapers may encounter difficulties. This makes it especially useful for recruiters, sales teams, and researchers who need efficient data extraction without technical hassles.

Octoparse: A more advanced visual scraping tool capable of dealing with intricate websites. Although it requires a bit more time to learn compared to a simple browser extension, Octoparse provides pre-built templates and a user-friendly, point-and-click interface. This allows users to create scraping "recipes" without needing to write any code, making it accessible for those willing to invest some time in understanding its functionalities.

ParseHub: This is another strong desktop application well-suited for extracting data from sites that heavily rely on JavaScript. With ParseHub, users can select the data they wish to extract through a visual interface. It also supports navigating between multiple pages, opening drop-down menus, and managing login credentials, providing a comprehensive scraping solution for complex websites.

When your main data scraper is malfunctioning, having a straightforward and dependable backup like ProfileSpider can be extremely helpful. A backup turns a potential blocker into a minor setback, so you can gather the leads, candidates, or research data you need and quickly return to your core tasks.

FAQ Section

Q: Why does my data scraper export empty rows?

A: This issue often arises due to changes in the website's structure or incorrect selector configurations in your scraper settings.

Q: How often do websites change their layout?

A: Websites can update their layouts frequently, sometimes even daily, depending on the site's maintenance schedule and objectives.

Q: Is there a way to avoid scraper failures in the future?

A: Regularly updating your scraper settings and monitoring changes in website structures can help minimize failures.
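If you are comfortable with a little scripting, monitoring for structural changes can be approximated by fingerprinting a page's layout and comparing it over time. The Python sketch below is one possible approach under simple assumptions: it hashes the set of tag and class combinations on a page and ignores the text. The function name `layout_fingerprint` and the sample snippets are hypothetical.

```python
import hashlib
from html.parser import HTMLParser


class StructureRecorder(HTMLParser):
    """Records each distinct tag/class combination, ignoring text content."""

    def __init__(self):
        super().__init__()
        self.signature = set()

    def handle_starttag(self, tag, attrs):
        classes = " ".join(sorted((dict(attrs).get("class") or "").split()))
        self.signature.add(f"{tag}.{classes}")


def layout_fingerprint(html):
    """Return a hash that changes when tags or class names change."""
    recorder = StructureRecorder()
    recorder.feed(html)
    joined = "|".join(sorted(recorder.signature))
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


# Hypothetical snapshots of the same listing taken at different times.
LAST_WEEK = '<div class="card"><span class="name">Ada</span></div>'
TODAY_SAME = '<div class="card"><span class="name">Grace</span></div>'
TODAY_CHANGED = '<div class="card"><span class="person-name">Grace</span></div>'

print(layout_fingerprint(LAST_WEEK) == layout_fingerprint(TODAY_SAME))     # True
print(layout_fingerprint(LAST_WEEK) == layout_fingerprint(TODAY_CHANGED))  # False
```

Because only the structure is hashed, new names or extra rows do not trigger a false alarm, while a renamed class does. A changed fingerprint is a hint to review your scraper settings, not proof that the scraper is broken.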