It's the moment every sales professional, recruiter, or marketer dreads. The web scraper that was pulling in fresh leads yesterday is suddenly dead in the water. One minute, you have a reliable data stream for your outreach campaigns; the next, you're staring at an error message or, worse, an empty spreadsheet.

This isn't just a technical glitch. It’s a direct result of how the modern web works, and for business users, it almost always boils down to one of two culprits: the website's layout changed, or the site actively blocked your tool.

Understanding Why Your Scraper Suddenly Fails

Websites are constantly evolving. Developers are always shipping new features, patching security holes, or improving the user experience. For professionals who depend on data extraction to build lead lists and find candidates, this constant flux creates a huge headache.

Before diving into the technical reasons, let's look at the common symptoms you'll encounter. This table breaks down the most frequent problems and what they mean for your workflow.

Quick Diagnosis of Common Scraper Failures

| Problem Area | Common Symptom | What It Means for Your Business |
|---|---|---|
| Website Structure | Scraper returns empty fields, partial data, or errors out completely. | The website's layout changed, and your scraper's "map" is now outdated, leading to incomplete or useless lead lists. |
| Anti-Bot Defenses | You're hit with CAPTCHAs, error pages, or your IP gets blocked. | The website has identified your tool as a bot and is actively shutting you down, halting lead generation. |
| Authentication/Session | The scraper can't get past a login wall it used to handle. | The login process changed, or your session cookies are no longer valid, cutting off access to valuable profile data. |
| Rate Limiting | Your scraper works for a bit, then starts getting errors. | You're making too many requests too quickly, and the server is throttling your connection, resulting in partial data. |

Recognizing these symptoms is the first step. Now, let's explore the two biggest issues in more detail.

The Two Core Reasons Your Scraper Broke

Most scraper problems, especially for non-developers, fall into two main buckets. Understanding these will help you diagnose the issue without needing to write a single line of code.

- The Goalposts Moved (Website Structure Changes): Think of a traditional scraper as having a rigid map to find data on a page. It's been told to go to a specific spot, marked by a particular HTML tag, to grab a job title or a company name. But when a site developer changes something, even a seemingly tiny detail like renaming a class from `profile-name` to `user-name`, that map becomes useless. The scraper shows up to the old spot, finds nothing, and breaks.

- The Bouncer Kicked You Out (Active Anti-Bot Defenses): Websites with valuable profile data, like professional networks, don't just sit back and let scrapers run wild. They employ sophisticated systems to tell the difference between a human user and a bot. If your scraper is making requests too fast or in a predictable pattern, these systems flag it as suspicious and shut it down. This can result in your IP address being blocked, either temporarily or for good. If you've ever had an account locked down after trying to pull data, you've seen this in action. Many have learned this the hard way when LinkedIn restricted their account for scraping.
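To make the renamed-class scenario concrete, here is a minimal Python sketch. The two page snippets, the sample name, and the `extract_name` helper are all made up for illustration. The snippets are assumed to be well-formed markup so the standard library's XML parser can read them; real pages usually need a proper HTML parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical page snippets: the only difference is the renamed class.
OLD_PAGE = '<div><h1 class="profile-name">Jane Doe</h1></div>'
NEW_PAGE = '<div><h1 class="user-name">Jane Doe</h1></div>'


def extract_name(page, class_name="profile-name"):
    """Return the text of the first element with the given class, or None."""
    root = ET.fromstring(page)
    node = root.find(f".//*[@class='{class_name}']")
    return node.text if node is not None else None


print(extract_name(OLD_PAGE))  # Jane Doe
print(extract_name(NEW_PAGE))  # None
```

The second call returns `None` even though the name is still on the page. The "map" points at a class that no longer exists, which is exactly how a one-word change produces empty fields.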

Key Takeaway: A scraper that stops working is a symptom of a dynamic web. Either the digital goalposts have moved (structure change), or the gatekeeper has kicked you out (anti-bot measures).

For sales and recruiting professionals, the goal isn't to become a developer who can constantly fix broken code. The goal is to get reliable data without the technical headache. This is where modern, no-code scraping tools make a significant difference. An AI-powered tool like ProfileSpider doesn’t rely on rigid maps. Instead, it’s trained to recognize data patterns—like what a name, job title, or company looks like—making it far more resilient to minor website changes and more effective at delivering the leads you need.

A Non-Technical Guide to Diagnosing the Issue

So, your web scraper is broken. Before you can think about a fix, you have to play detective. Figuring out exactly why it failed is the most important step, and the good news is you don’t need to be a developer to get to the bottom of it.

The first and easiest test? Try visiting the website yourself in an incognito or private browser window. This makes the site see you as a brand-new visitor. If you’re immediately hit with a CAPTCHA puzzle or a blunt "Access Denied" page, you’ve found your culprit. That’s a clear sign that the site’s anti-bot defenses have kicked in.

But if the page loads just fine, it’s time to peek under the hood. Don’t worry, it’s easier than it sounds.

Using Browser Developer Tools

Every modern browser has a set of "Developer Tools" built right in. You can usually open them by right-clicking anywhere on the page and selecting "Inspect." The wall of code might look intimidating, but we’re only looking for one or two specific clues.

Find the tab labeled "Network," click it, and then refresh the webpage. You'll see a running list of everything the page is loading. Keep an eye out for any items that show up in red. If you see status codes like 403 Forbidden or 429 Too Many Requests, that’s a clear sign the server is actively blocking your scraper.
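If you or a teammate ever want to automate that check, the same reading of status codes can be sketched in a few lines of Python. The `diagnose_status` helper and its labels are hypothetical; only the meaning of 403 and 429 comes from the HTTP standard.

```python
from http import HTTPStatus


def diagnose_status(code):
    """Translate an HTTP status code into a rough, human-readable diagnosis."""
    if code == HTTPStatus.FORBIDDEN:  # 403
        return "blocked: the server refused the request"
    if code == HTTPStatus.TOO_MANY_REQUESTS:  # 429
        return "rate limited: too many requests too quickly"
    if 200 <= code < 300:
        return "ok: the server answered normally"
    return "other: look up this status code"


for code in (200, 403, 429):
    print(code, "->", diagnose_status(code))
```

A 200 response does not prove the data arrived, only that the server did not refuse you, so it is worth pairing this with a look at the page content itself.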

Checking the Page Source

Another common trap, especially with modern websites, is that the data you want simply isn't there when the page first loads. Many sites use JavaScript to pull in profile details after the main page structure is already visible.

To check for this, right-click on the page and choose "View Page Source." This shows you the raw, initial HTML the server sent. Now, just use your browser's find function (Ctrl+F or Cmd+F) to search for a piece of data you were expecting to scrape, like a name or a job title. If you can see it on the live page but can't find it anywhere in the source code, that’s your answer. Your scraper is likely failing because it can't process a dynamic, JavaScript-heavy site. Any instant data scraper needs the information to be present in that initial HTML to grab it.
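The "View Page Source plus Ctrl+F" test can also be expressed as a short Python sketch. Both helper names are hypothetical, the sample HTML is invented, and the check is deliberately naive: it assumes the text appears in the HTML exactly as typed, with no tags or special characters in the middle.

```python
from urllib.request import urlopen


def fetch_raw_html(url, timeout=10):
    """Download the initial HTML the server sends, without running JavaScript."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def is_in_initial_html(raw_html, expected_text):
    """Case-insensitive version of searching the page source with Ctrl+F."""
    return expected_text.casefold() in raw_html.casefold()


# Invented example: a page skeleton that JavaScript would fill in later.
skeleton = '<html><body><div id="root"></div></body></html>'
print(is_in_initial_html(skeleton, "Jane Doe"))  # False
```

If `is_in_initial_html` returns `False` for text you can clearly see in the browser, the data is most likely being loaded by JavaScript after the initial page arrives.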

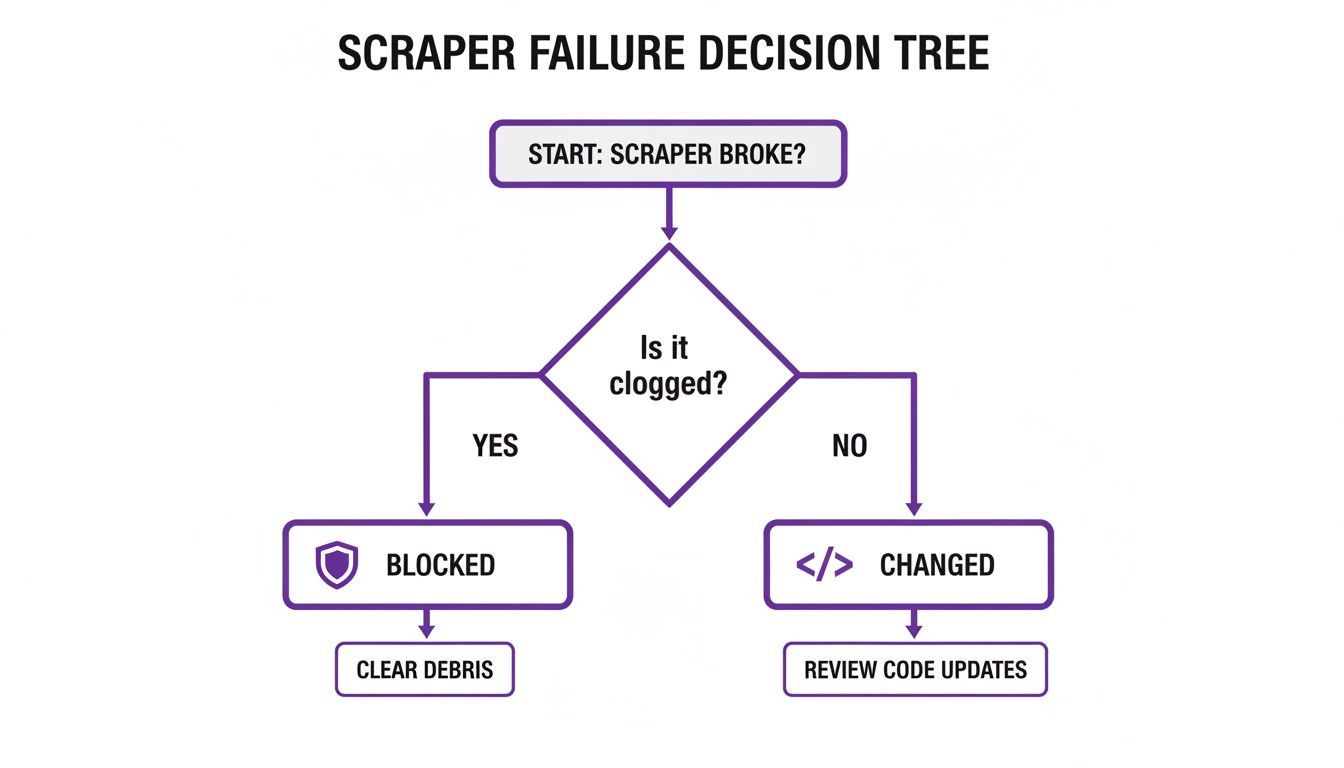

This flowchart can help you visualize the process when your scraper stops working, showing whether a block or a site change is the likely problem.

It really boils down to two main possibilities: either the website’s security is actively blocking you, or the site’s layout has changed, breaking the logic your tool was relying on.

When a scraper suddenly dies, anti-bot measures are very often the reason. Some sources estimate that 90–95% of scraper failures come from CAPTCHAs, IP bans, and behavioral tracking rather than simple coding or network errors. You can dig into more web crawling stats over at Thunderbit.

Overcoming Modern Anti-Scraping Defenses

If your diagnosis led you to anti-bot measures, then you’ve hit the most common culprit for a broken web scraper. Sites with valuable lead or profile data are in a constant tug-of-war with automated traffic, and your tool just got caught in the crossfire.

It might sound technical, but the core ideas are straightforward. Websites look for signals that separate a real person from a bot. When a tool sends too many requests too quickly or just acts in a predictable, robotic way, it gets flagged.

And that’s when the digital gates slam shut.

Common Anti-Bot Tactics Explained

You don't need to be a developer to understand what's going on. For most sales and marketing professionals, the roadblocks you hit will usually fall into a few familiar categories:

- IP Address Blocking: This is the oldest trick in the book. If a server sees a flood of activity from a single IP address, it just blocks all future requests from it. This is why so many cloud-based scrapers that run from obvious data centers get spotted and shut down almost immediately.

- CAPTCHA Challenges: The classic "I'm not a robot" checkbox or those annoying picture puzzles are designed specifically to stop automated scripts. If your scraper can't solve it, it’s not getting through.

- Behavioral Analysis: More sophisticated systems actually watch how you browse—tracking mouse movements, clicking patterns, and even how fast you navigate. A scraper that jumps from page to page instantly without any human-like pauses raises serious red flags.
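For readers curious what "slowing down" looks like in a script, here is a rough sketch of one common pacing approach: wait longer after each failed attempt, and respect the server's own hint when it gives one. The `backoff_delay` helper and its default numbers are assumptions chosen for illustration, and the sketch assumes the `Retry-After` value is given in seconds (servers may also send a date).

```python
def backoff_delay(attempt, retry_after=None, base=2.0, cap=60.0):
    """Seconds to wait before retry number `attempt` (0 = first retry).

    If the server supplied a Retry-After value in seconds, honor it.
    Otherwise double the wait on every attempt, up to `cap` seconds.
    """
    if retry_after is not None:
        return float(retry_after)
    return min(base * (2 ** attempt), cap)


for attempt in range(4):
    print(attempt, backoff_delay(attempt))  # waits of 2.0, 4.0, 8.0, 16.0
```

Pacing like this addresses simple rate limits only. It does nothing against CAPTCHAs or behavioral analysis, which is why it is not a complete fix on its own.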

The Professional's Dilemma: You're not a hacker trying to bring the site down; you're just trying to gather contacts for your business. But to the website, your tool looks like a malicious bot. This is the fundamental challenge that traditional scraping tools just can't solve on their own.

The No-Code Solution to Bypass Blocks

While a developer might dive into a complex world of rotating residential proxies or CAPTCHA-solving APIs, that’s overkill—and way too technical—for most business users. For targeted lead generation, the fix is often much simpler: use a tool that acts more like a person.

This is where a modern, no-code tool like ProfileSpider completely changes the game. Instead of running from a suspicious server in a data center, it operates right inside your own Chrome browser. That one difference is a massive advantage.

By working as an extension, ProfileSpider uses your existing, trusted browser session. To the website's server, its activity just looks like you—a logged-in user—navigating the site normally. It uses your own IP address and cookies, which naturally sidesteps the server-side detection that trips up so many other tools. This is a critical distinction, especially on platforms that are famously tough to scrape. You can learn more by exploring why Chrome extensions get blocked on LinkedIn and how a local-first approach helps avoid those issues.

For really tough scraping jobs, you might need to look into more advanced LinkedIn scraping strategies to understand the platform's unique quirks. But for most day-to-day lead generation, the goal isn't to fight the website—it's to work with it. ProfileSpider’s one-click, AI-powered extraction is designed to do just that, turning a technical arms race into a simple, efficient workflow.

Extracting Data from Dynamic Websites

Ever had a scraper that worked perfectly one day and failed the next, even though the website looks exactly the same? It's a maddeningly common problem, and it often has nothing to do with being blocked.

The real culprit is often invisible. Your scraper might be arriving at the page too early, before the most important information—the data you need—has even loaded.

Think of it like this: a simple scraper takes a quick snapshot of a webpage's initial HTML code. But many modern sites don't load everything at once. They serve up a basic skeleton and then use JavaScript to pull in the important stuff—like contact info, product lists, or profile data—a split second later. If your scraper only reads that initial code, it’s going to leave with empty hands.

The Challenge of JavaScript-Powered Content

This is especially true for sites built with modern frameworks like React or Angular. They create a smooth, seamless experience for human visitors, but they throw a massive wrench into the works for basic data extraction tools. The scraper looks at the raw HTML and sees a bunch of placeholders, not the final, rendered data that you’re looking at in your browser.

This isn't a rare issue; it's a huge reason why scrapers break. A growing number of websites render their most valuable data on the client side, in the visitor's browser. This means that a scraper that can't execute JavaScript the way a real browser does will miss that data entirely.

The Key Insight: Your scraper isn’t broken; it’s just blind to dynamically loaded content. It’s like trying to read a letter written in invisible ink without the special light.

Using a Tool That Sees the Whole Picture

So, what's the fix? You need a tool that can fully render the page—JavaScript and all—before it even thinks about extracting data.

In the past, this was a massive technical headache. It meant setting up complex "headless browsers" and writing custom code, a task that’s way beyond the reach of most sales, marketing, and recruiting professionals. If you're curious what that world looks like, our guide on how to build a simple web scraper with Python can give you a peek behind the curtain.

Thankfully, you don't have to be a developer anymore.

Modern, no-code tools are built to handle this automatically. A browser-based extension like ProfileSpider, for instance, works directly inside your live browser. It doesn't need to simulate a browser because it is the browser. It sees the final, fully-loaded page exactly as you do.

Because it operates as a Chrome extension, there’s zero special configuration needed to handle dynamic sites. ProfileSpider just waits for the page to finish loading everything and then lets its AI identify and pull the profiles you need. Understanding the basics of modern web application architecture helps explain why this is so effective. It turns a complex rendering problem into a simple, one-click workflow.

Avoiding Silent Failures and Bad Data

A web scraper that just stops working is a pain. But a scraper that seems to work fine while feeding you inaccurate data? That’s a much bigger business problem.

This is what we call a “silent failure.” It’s when your tool runs and reports success, but silently pulls incomplete, wrong, or duplicated information without ever throwing an error.

Think about it. If you’re a sales pro building a lead list or a recruiter sourcing candidates, this kind of bad data is often worse than no data at all. It pollutes your CRM, wastes your team's time on outreach that goes nowhere, and slowly kills trust in your entire data-driven process.

Imagine your scraper is working through a list of 25 contacts on a page and hits a rate limit partway down. It might only grab the first 10 profiles but still report the job as "complete." You would have no idea you just missed 15 of the 25 leads on that page.
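A simple guard against this kind of silent failure is to compare what you got with what you expected. The sketch below uses the 25-versus-10 scenario above; the `check_completeness` helper and the placeholder records are hypothetical.

```python
def check_completeness(records, expected_count):
    """Compare the number of scraped records with the number expected."""
    got = len(records)
    missing = max(expected_count - got, 0)
    return {
        "expected": expected_count,
        "got": got,
        "missing": missing,
        "complete": missing == 0,
    }


# Placeholder data: 10 scraped profiles from a page that lists 25.
scraped = [{"name": f"Contact {i}"} for i in range(10)]
report = check_completeness(scraped, expected_count=25)
print(report)
# {'expected': 25, 'got': 10, 'missing': 15, 'complete': False}
```

The expected count has to come from somewhere you trust, such as the "25 results" label the page itself displays. Without that reference number, a short list looks just like a complete one.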

The Real Cost of Bad Data

This isn't just a technical glitch; it's a major business risk. When scrapers fail intermittently because of temporary blocks or small layout changes on a site, the quality of your dataset tanks.

Industry watchers have pointed out that in poorly managed scraping setups, it’s not uncommon for 25–30% or more of scraped records to be duplicated, incomplete, or just plain wrong. You can read more about the impact of silent failures on World Business Outlook.

This kind of data rot creates very real problems:

- Wasted Time: Your team ends up manually cleaning messy lists or trying to contact people who don't exist.

- Damaged Reputation: Nothing says "spam" like sending a poorly targeted message based on incorrect data. It can seriously harm your brand's credibility.

- Flawed Strategy: If you're making decisions based on incomplete information, you're flying blind.
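Before a scraped list reaches your CRM, a quick audit can show how much of it is duplicated or incomplete. This is a minimal sketch, assuming each record is a dictionary with `name`, `job_title`, and `company` fields. Those field names, the `audit_records` helper, and the sample rows are all invented for illustration.

```python
def audit_records(records, required=("name", "job_title", "company")):
    """Count incomplete and duplicate records in a scraped list."""
    seen = set()
    report = {"total": len(records), "incomplete": 0, "duplicate": 0}
    for record in records:
        # Normalize so "Jane Doe " and "jane doe" count as the same value.
        values = tuple(
            (record.get(field) or "").strip().casefold() for field in required
        )
        if not all(values):
            report["incomplete"] += 1  # at least one required field is empty
        elif values in seen:
            report["duplicate"] += 1  # same person and role seen earlier
        else:
            seen.add(values)
    return report


sample = [
    {"name": "Jane Doe", "job_title": "CTO", "company": "Acme"},
    {"name": "jane doe ", "job_title": "CTO", "company": "Acme"},
    {"name": "John Roe", "job_title": "", "company": "Acme"},
]
print(audit_records(sample))
# {'total': 3, 'incomplete': 1, 'duplicate': 1}
```

An audit like this catches missing and repeated rows. It cannot tell whether a value landed in the wrong column, so a quick visual spot check is still worthwhile.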

How AI-Powered Tools Solve This Problem

This is where modern, AI-driven tools have a massive advantage over older, selector-based scrapers.

A traditional scraper is rigid. It relies on a fixed "map" of a website's code. If the site changes one tiny element—like a class name on a button—the scraper can easily start grabbing data from the wrong field. You might end up with a company's city listed in the "Job Title" column, and the scraper would be none the wiser.

A brittle, code-based scraper is like an old assembly line robot programmed to pick up a specific bolt. If the bolt's shape changes even slightly, the robot might grab the wrong part or nothing at all, sending defects down the line without ever sounding an alarm.

An AI-driven tool like ProfileSpider works completely differently. It doesn’t just follow a rigid map; it has been trained to understand the context of the data it’s looking at.

It knows what a person's name, job title, or company profile looks like, even if the website's layout changes. This contextual understanding dramatically cuts down the risk of pulling mismatched or incorrect information. By focusing on what the data means, ProfileSpider helps you sidestep the silent failures that can quietly sabotage your lead generation efforts.

Your Top Scraping Questions, Answered

Even when you have the right tool, data extraction can be tricky. When things break, it’s natural to have questions. Here are some straight answers to the most common issues sales pros and recruiters run into.

How often will my scraper break?

If you're using a traditional, code-based scraper, expect it to break often. The target website might tweak its layout—sometimes weekly, sometimes even daily—and your scraper will stop working. This constant cycle of fixing and refactoring is a massive time-drain, especially if you're not a developer.

This is where a modern, AI-powered tool like ProfileSpider really changes the game. Instead of relying on rigid code that breaks with every little change, it’s trained to recognize data patterns. It knows what a name looks like or how a job title is structured, so it can adapt on the fly. This slashes the need for constant maintenance and keeps the data flowing reliably.

Is it okay to scrape public data?

Generally, yes, collecting publicly available data for business purposes is fine, but the key is to do it ethically and responsibly. Think of it like being a good guest. You need to respect the website's terms of service, steer clear of personal data that falls under regulations like GDPR, and avoid hammering the site's servers with aggressive requests.

Tools designed for specific professional use cases, like ProfileSpider, are built to keep you on the right side of that line. The goal is targeted lead generation or recruiting, not carpet-bombing a site for every piece of data it holds. This approach keeps things professional and avoids disruptive, large-scale scraping.

My scraper is blocked. Will a VPN help?

Sometimes, but it’s usually a temporary fix at best. If your personal IP address gets flagged and blocked, a VPN can get you back in by routing your traffic through a different IP address. The catch is that most major websites have gotten very good at identifying and blocking traffic from known commercial VPN services, so you’ll just end up in a cat-and-mouse game.

For the kind of targeted work most professionals are doing, a browser-based tool like ProfileSpider is a much simpler and more stable solution. It operates within your own trusted browser session, using your existing IP address. This approach often sidesteps the whole IP-blocking issue from the start, letting you gather the business data you need without technical gymnastics.

My Two Cents: While a VPN has its uses, it's rarely a silver bullet for scraping issues. Leveraging your natural browser activity with a smart extension is a far more reliable way to avoid common anti-bot defenses without a lot of extra work.

Why not just hire a developer to build a scraper?

For most business professionals, the goal is getting actionable data—not starting a new software development project. Bringing in a developer to build a custom scraper might sound like the ultimate solution, but it’s slow, expensive, and creates a dependency. Every time the target site changes, you’re back on the phone with your developer, waiting (and paying) for a fix.

A no-code tool like ProfileSpider puts you in the driver's seat. It’s a one-click solution that completely removes the technical overhead. You get the data you need without the development headaches, allowing you to focus your time and energy on what actually moves the needle: connecting with leads, finding great candidates, and growing your business.